Speaking of technology, it is hard not to mention artificial intelligence (AI): it can seem omnipresent, omnipotent, and omniscient. AI has already brought a huge amount of value to society, and it is influencing the infrastructure of many industry verticals. AI is the connection between man and machine, and its life-altering set of technologies is set to be a pillar of future civilization. AI has made incredible progress in the past few years and will continue to move into exciting new applications. AI is a very broad topic, but its most influential part is machine learning (ML). ML, in turn, is fueled by deep learning, which uses artificial neural networks. Investment in these technologies is surging because of their ability to analyze and interpret large amounts of collected data efficiently, using algorithms that run either in the cloud or outside it.

According to Markets and Markets, the artificial intelligence market is expected to grow from USD 21.5 billion in 2018 to USD 190.6 billion by 2025, at a CAGR of 36.6% (2018-2025). AI/ML is widely adopted across industry verticals, including Automotive, Aerospace, Home & Industrial Automation, Healthcare, Consumer Electronics, Manufacturing, Transport & Logistics, Agriculture, Retail, and Security, because it improves productivity, customer experience, and personalization.

Why is demand shifting from cloud-based AI to on-device AI?

AI/ML algorithms can be deployed in two ways: cloud-based and on-device. Cloud-based AI systems face issues with latency, quality, power, security, and privacy. Latency is one of the most prominent challenges: because the AI/ML models reside in the cloud, data has to be uploaded for every request, and the round trip introduces a delay. Applications such as robot surgeons, autonomous rescue drones, and self-driving cars cannot tolerate even a brief lag when connecting to the cloud, as it can change the outcome completely. The constant data transfer from edge devices to the cloud also consumes a large amount of power, creating a dual challenge: higher networking and data-transfer costs, and a larger carbon footprint. Finally, transferring sensitive data to the cloud raises privacy concerns, and requiring devices to be always online through an internet or cloud connection raises accessibility concerns.

In contrast, local AI processing is much faster and more reliable, because latency drops significantly when there is no need to connect to the cloud. Quality improves as well. Consider Google Lens as an example: Google has to compress the image before sending it to the cloud, purely for bandwidth reasons, and this results in a loss of quality. With on-device AI, there is no need to compress the image. On-device processing also saves network bandwidth. From a macro perspective, it is more power-efficient to process data on the device than to send it to the cloud, once the network overhead is added to the compute resources consumed in the cloud. Processing on the device can also improve privacy and accessibility, as everything stays on the device and no internet or cloud connection is required. Gartner has forecast that 80% of smartphones on the market will have on-device AI by 2022.
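The latency argument can be made concrete with a rough back-of-the-envelope model. The sketch below is only an illustration: the class names, the payload size, the bandwidth, and every timing figure are assumptions chosen for the example, not measurements of any real service or device.

```python
from dataclasses import dataclass


@dataclass
class CloudPath:
    """Simplified model of one cloud inference request (all values assumed)."""
    payload_bytes: int          # data uploaded per request, e.g. a compressed image
    uplink_bps: float           # uplink bandwidth in bits per second
    round_trip_s: float         # network round-trip time in seconds
    server_inference_s: float   # time the cloud-hosted model needs to run

    def latency_s(self) -> float:
        # Upload time plus network round trip plus server-side inference.
        upload_s = self.payload_bytes * 8 / self.uplink_bps
        return upload_s + self.round_trip_s + self.server_inference_s


@dataclass
class DevicePath:
    """Simplified model of one on-device inference (value assumed)."""
    device_inference_s: float   # time the local model needs to run

    def latency_s(self) -> float:
        # No upload and no network round trip: only local compute.
        return self.device_inference_s


# Hypothetical numbers: a 500 KB image over a 10 Mbit/s uplink with a 50 ms RTT.
cloud = CloudPath(payload_bytes=500_000, uplink_bps=10_000_000,
                  round_trip_s=0.05, server_inference_s=0.01)
device = DevicePath(device_inference_s=0.03)

print(f"cloud:  {cloud.latency_s():.3f} s")
print(f"device: {device.latency_s():.3f} s")
```

With these assumed inputs, the upload alone takes 0.4 s, so the cloud path is dominated by data transfer rather than by the model itself. Real figures vary widely with network conditions and hardware.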

How does Qualcomm® Snapdragon™ give you a competitive advantage in building on-device AI?

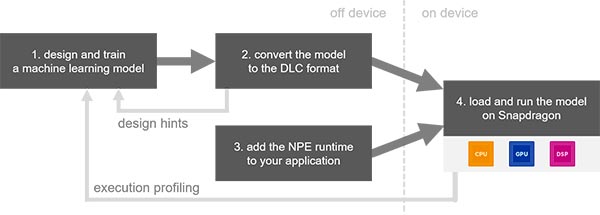

Building more intelligent experiences for users and industry players has always been a top priority for Qualcomm Technologies. Today’s always-on applications have high-speed compute requirements. Qualcomm® Snapdragon™ mobile platforms in the 6XX, 7XX, and 8XX series have extensive heterogeneous computing capabilities that can deliver compelling on-device AI-enabled user experiences, with the help of the Qualcomm Neural Processing SDK for AI and the Qualcomm AI Engine. The SDK does not add yet another library of network layers; instead, it helps designers optimize the performance of trained neural networks on Snapdragon devices with less effort, whether they run on the CPU, GPU, or DSP. The AI Engine in Snapdragon platforms is built to run AI models efficiently.
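To illustrate what choosing between the CPU, GPU, and DSP can look like in application code, here is a minimal sketch of a runtime-selection helper. It is not the Qualcomm Neural Processing SDK API: `pick_runtime`, `PREFERRED_ORDER`, and the DSP-first preference order are all hypothetical names and assumptions made for this example.

```python
from typing import Iterable, Optional

# Assumed preference order for this sketch: try the DSP first,
# then the GPU, and fall back to the CPU.
PREFERRED_ORDER = ("DSP", "GPU", "CPU")


def pick_runtime(available: Iterable[str],
                 preferred: Iterable[str] = PREFERRED_ORDER) -> Optional[str]:
    """Return the first preferred runtime that the device reports as available.

    `available` is whatever list of runtime names the application has
    detected; how that detection is done is outside this sketch.
    """
    available_set = {name.upper() for name in available}
    for name in preferred:
        if name in available_set:
            return name
    return None  # nothing usable was reported


# Example: a device that reports only a CPU and a GPU.
print(pick_runtime(["cpu", "gpu"]))
```

Keeping the preference order as data makes it easy to change the policy per model, for example preferring the GPU for a network that has not been quantized.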

Snapdragon™ core hardware architectures are engineered to run AI/ML applications locally, quickly and efficiently. Inference workloads can run directly on the AI Engine in the latest Qualcomm® Snapdragon™ 855 mobile platform, which can perform up to 7 trillion calculations per second. Want an illustration? Check this video, in which Mr. Enrico Ros, Product Manager-AI at Qualcomm®, tests an AI object classification application that detects multiple objects at a time, running on the SD855 and using the Qualcomm Neural Processing SDK for AI. Another proof point is Google Lens, a visual search product from Google and a strong example of on-device AI in use.
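To put the figure of 7 trillion calculations per second in perspective, the following sketch computes a theoretical lower bound on inference time for a model of a given size. The operation count of the model is a hypothetical value, and the bound assumes perfect hardware utilization, which real workloads do not reach because of memory traffic and scheduling overhead.

```python
# Peak throughput quoted in the text: "up to 7 trillion calculations per second".
PEAK_OPS_PER_SECOND = 7e12


def min_inference_time_s(ops_per_inference: float,
                         peak_ops_per_second: float = PEAK_OPS_PER_SECOND) -> float:
    """Theoretical best-case time for one inference at peak throughput."""
    if ops_per_inference < 0 or peak_ops_per_second <= 0:
        raise ValueError("operation count must be >= 0 and throughput must be > 0")
    return ops_per_inference / peak_ops_per_second


# Hypothetical model needing one billion operations per inference.
model_ops = 1e9
bound_s = min_inference_time_s(model_ops)
print(f"best case: {bound_s * 1e3:.3f} ms per inference")
```

For the assumed one-billion-operation model, the bound works out to roughly 0.14 ms. Measured latency on a real device should be expected to be higher.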

Conclusion

Machine learning and data processing in the cloud won’t go away, but on-device AI is what is making connected devices smarter and faster, and it will keep the upper hand over cloud-powered AI systems wherever latency, privacy, and bandwidth matter. Tech giants like Qualcomm® are transforming the AI/ML industry with on-device AI innovations such as the Qualcomm Neural Processing SDK for AI and the Qualcomm AI Engine on Snapdragon™ processors.

As an authorized Snapdragon™ Technology Partner and a Qualcomm® Licensee, eInfochips has early access to various Snapdragon™ platforms and other technologies, which gives us a competitive advantage in developing innovative products. We also offer engineering services that draw on our comprehensive expertise in, and detailed understanding of, Qualcomm® Snapdragon™ 855, 845, 835, 820, 624, 660, 410, and 212 processors for efficient, high-performance designs. To learn more, get in touch with us.