Introduction

Did you know that in modern artificial intelligence (AI) applications, Retrieval-Augmented Generation (RAG) is changing the way we interact with AI? By combining large language models with up-to-date, authoritative knowledge sources, RAG improves the accuracy, relevance, and transparency of AI-generated responses. But what impact does this innovation have on the future of AI, and what role does Azure infrastructure play in its development?

As we delve deeper into the world of generative AI, we find ourselves at the forefront of a technological revolution. These advanced systems can create text, images, and even code, pushing the boundaries of what we thought possible in human-machine interaction. From enhancing creative processes to solving complex problems, generative AI is transforming industries and opening new avenues for innovation.

RAG (Retrieval-Augmented Generation)

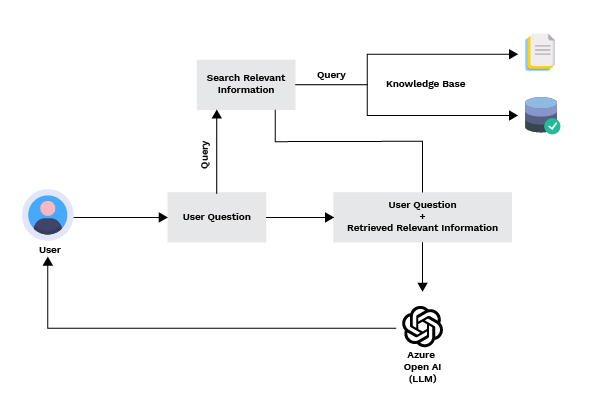

Retrieval-Augmented Generation is a natural language processing (NLP) architecture that pairs a retrieval component with a generative language model, typically a transformer-based model such as GPT.

The retrieval component of RAG finds relevant information in large text collections in response to a specific query or prompt. The retrieved passages are then supplied to the language model as additional context, improving the quality and relevance of the responses it generates.

Combining retrieval and generation lets the model produce more accurate and contextually relevant outputs by drawing on external knowledge sources. This architecture has shown promising results in a wide range of NLP applications, including question answering, text summarization, and dialogue generation.
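To make the two parts concrete, here is a minimal, self-contained Python sketch of the retrieve-then-augment flow. It is not how any particular RAG system is built: a simple keyword-overlap score stands in for a real retriever (production systems typically use BM25 or vector similarity), the knowledge base is a hypothetical in-memory list, and the generation step is left out. In practice, the assembled prompt would be sent to an LLM such as GPT.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base. In a real system these passages
# would live in an external store such as a search index.
KNOWLEDGE_BASE = [
    "Azure AI Search can index content from Azure Blob Storage.",
    "Retrieval-Augmented Generation adds retrieved passages to the prompt.",
    "GPT models are transformer-based language models.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Retrieval stage (toy): rank passages by tokens shared with the query."""
    query_tokens = Counter(tokenize(query))

    def overlap(passage: str) -> int:
        # Number of tokens (with multiplicity) shared by query and passage.
        return sum((query_tokens & Counter(tokenize(passage))).values())

    ranked = sorted(passages, key=overlap, reverse=True)
    # Keep the top-k passages, dropping any that share no tokens at all.
    return [p for p in ranked[:k] if overlap(p) > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation: place the retrieved passages in front of the question."""
    context_block = "\n".join(f"- {p}" for p in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "What does Retrieval-Augmented Generation add to the prompt?"
    # The generation stage would send this prompt to an LLM.
    print(build_prompt(question, retrieve(question, KNOWLEDGE_BASE)))
```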

One real-life application of Retrieval-Augmented Generation (RAG) is in the field of virtual assistants, such as chatbots or voice assistants.

Imagine a scenario where you’re chatting with a virtual assistant to plan a trip. With traditional methods, the assistant might generate responses based solely on pre-programmed rules or a limited set of data.

However, with RAG, the assistant can retrieve relevant information from vast databases, forums, or travel websites, providing more accurate and up-to-date recommendations. For instance, if you ask about the best local restaurants in a particular city, RAG can search through a wealth of online reviews and ratings, then generate responses tailored to your preferences. This integration of retrieval and generation enables the virtual assistant to offer more personalized and informative suggestions, enhancing the user experience significantly.

RAG functions in two stages: retrieval and content generation.

During the retrieval phase, algorithms search external knowledge bases for information relevant to the user’s query. The retrieved information is then passed into the generation phase, allowing large language models (LLMs) to create responses tailored to the user’s needs. This “open-book” approach improves user experiences and allows LLMs to address complex questions with greater accuracy and depth. Now let’s look at how to implement RAG with Azure infrastructure. We will explore the following approaches:

- Azure AI Enrichments

- Azure ML’s Prompt Flow

- Azure OpenAI On Your Data

- Azure Component-Based Custom Solutions

Azure AI Enrichments

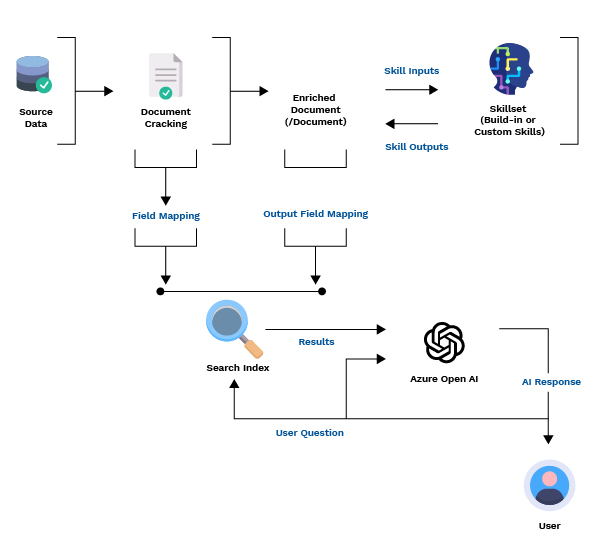

In the first scenario, where the knowledge base is updated infrequently and the number of knowledge sources is limited, Azure AI Enrichments can be used. First, you create a data source that points to where the knowledge base is stored; supported sources include Azure Blob Storage, Azure Data Lake Storage Gen2, Azure SQL Database, Azure Table Storage, and Azure Cosmos DB. Next, you define a skillset, a reusable resource in Azure AI Search that is attached to an indexer. A skillset contains one or more skills, each of which invokes built-in AI or external custom processing on documents retrieved from the data source.
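In Azure AI Search, a skillset is defined as JSON and created through the REST API (a `PUT` to `/skillsets/{name}`). The sketch below builds such a definition as a Python dictionary, assuming two built-in skills: the Text Split skill to chunk documents and the Key Phrase Extraction skill applied to each chunk. The skillset name and the `pages`/`keyphrases` node names are hypothetical; check the skillset reference for the API version you target before relying on specific properties.

```python
import json

# Hypothetical names: "rag-skillset" and the "pages"/"keyphrases" node names
# are placeholders chosen for this sketch.
skillset = {
    "name": "rag-skillset",
    "description": "Split documents into chunks and extract key phrases",
    "skills": [
        {
            # Built-in skill that chunks the raw text into pages.
            "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
            "context": "/document",
            "textSplitMode": "pages",
            "maximumPageLength": 2000,
            "inputs": [{"name": "text", "source": "/document/content"}],
            "outputs": [{"name": "textItems", "targetName": "pages"}],
        },
        {
            # Built-in skill run once per page produced by the split skill,
            # so its output lands at /document/pages/*/keyphrases.
            "@odata.type": "#Microsoft.Skills.Text.KeyPhraseExtractionSkill",
            "context": "/document/pages/*",
            "inputs": [{"name": "text", "source": "/document/pages/*"}],
            "outputs": [{"name": "keyPhrases", "targetName": "keyphrases"}],
        },
    ],
}

# The JSON body you would send when creating the skillset.
print(json.dumps(skillset, indent=2))
```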

FIG 1.1 Ref: Skillset concepts – Azure AI Search | Microsoft Learn

Throughout skillset execution, skills read from and write to an enriched document. Initially, the enriched document contains only the raw content extracted from the data source. As each skill runs, it adds its outputs as new nodes in the document’s enrichment tree, so the document gains structure and depth. When skillset execution completes, output field mappings send selected enriched nodes to fields in the index. Raw content that should pass directly from the source to the index, without enrichment, is defined through field mappings.
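The flow of an enriched document can be illustrated with a toy model. The following Python sketch is purely conceptual: the indexer manages the real enrichment tree internally, and the functions `split_skill`, `word_count_skill`, and `run_indexer` are hypothetical stand-ins, not Azure APIs. It shows skills adding nodes one after another, output field mappings copying enriched nodes into index fields, and field mappings copying raw source fields directly.

```python
# Toy model only: real enrichment trees are managed inside the indexer.

def split_skill(doc: dict) -> None:
    """Hypothetical skill: adds a 'pages' node by splitting on blank lines."""
    doc["pages"] = [p.strip() for p in doc["content"].split("\n\n") if p.strip()]


def word_count_skill(doc: dict) -> None:
    """Hypothetical skill: adds 'wordCounts', built on the previous skill's node."""
    doc["wordCounts"] = [len(page.split()) for page in doc["pages"]]


def run_indexer(source_row, skills, field_mappings, output_field_mappings):
    """Builds one search document from one source row."""
    # The enriched document starts with raw content only.
    enriched = {"content": source_row["content"]}
    for skill in skills:
        skill(enriched)  # each skill adds new nodes to the enriched document

    search_doc = {}
    # Field mappings: raw source fields copied straight into index fields.
    for source_field, target_field in field_mappings.items():
        search_doc[target_field] = source_row[source_field]
    # Output field mappings: enriched nodes copied into index fields.
    for node_name, target_field in output_field_mappings.items():
        search_doc[target_field] = enriched[node_name]
    return search_doc


row = {"id": "42", "content": "First paragraph here.\n\nSecond one."}
doc = run_indexer(
    row,
    skills=[split_skill, word_count_skill],
    field_mappings={"id": "id"},
    output_field_mappings={"pages": "chunks", "wordCounts": "chunkWordCounts"},
)
print(doc)
```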

Enrichment is configured in both the skillset and the indexer. Once documents have been ingested and indexed, user queries can be processed: the question is first searched against the Azure AI Search index, and then the question and the search results are sent to Azure OpenAI to generate a response for the user.
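The query-time steps can be sketched as two HTTP requests built with Python’s standard library (they are constructed but not sent). This assumes the Azure AI Search REST API version `2023-11-01` and the Azure OpenAI chat completions API version `2024-02-01`; the endpoints, index name, deployment name, and keys in the example are placeholders. After sending the search request with `urllib.request.urlopen`, the hits are in the response’s `value` array, and which field holds the text (for example `content`) depends on your index schema.

```python
import json
import urllib.request

SEARCH_API_VERSION = "2023-11-01"   # assumed Azure AI Search REST version
OPENAI_API_VERSION = "2024-02-01"   # assumed Azure OpenAI REST version


def build_search_request(endpoint, index, key, question, top=3):
    """Request that runs the user's question against an Azure AI Search index."""
    url = f"{endpoint}/indexes/{index}/docs/search?api-version={SEARCH_API_VERSION}"
    body = {"search": question, "top": top}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": key},
        method="POST",
    )


def build_chat_request(endpoint, deployment, key, question, passages):
    """Request that sends the question plus retrieved passages to Azure OpenAI."""
    url = (f"{endpoint}/openai/deployments/{deployment}/chat/completions"
           f"?api-version={OPENAI_API_VERSION}")
    sources = "\n\n".join(passages)
    messages = [
        {"role": "system",
         "content": "Answer only from the sources below.\n\nSources:\n" + sources},
        {"role": "user", "content": question},
    ]
    return urllib.request.Request(
        url,
        data=json.dumps({"messages": messages}).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": key},
        method="POST",
    )


# Placeholder values; nothing is sent over the network here.
search_req = build_search_request(
    "https://example.search.windows.net", "docs", "<search-key>", "What is RAG?")
chat_req = build_chat_request(
    "https://example.openai.azure.com", "gpt-35-turbo", "<openai-key>",
    "What is RAG?", ["RAG adds retrieved passages to the prompt."])
```

The generated answer would then be read from `choices[0]["message"]["content"]` in the chat completions response.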

Azure ML’s Prompt flow

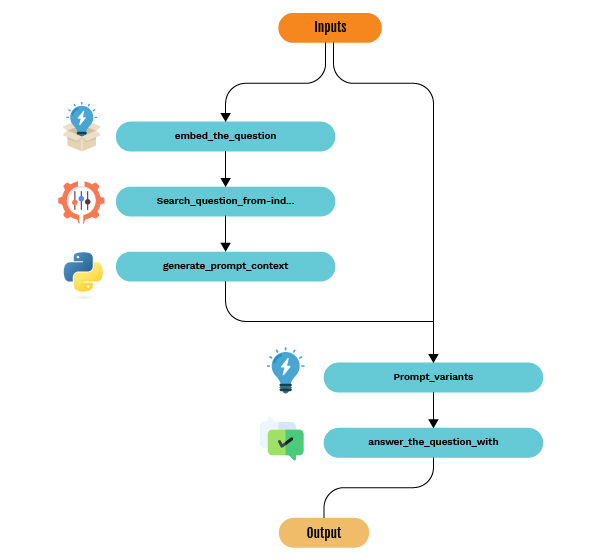

In this context, a prompt is the input (a text command or question) given to an AI model to produce a desired output such as content or answers. Crafting effective and efficient prompts is called prompt design or prompt engineering. Prompt flow is a development tool within Azure Machine Learning, with an interactive editor, tailored for prompt engineering projects. To get started, you can create a prompt flow from the RAG sample in the Azure Machine Learning sample gallery. This sample serves as a learning tool for using a Vector Index within a prompt flow.

FIG 1.2 Ref: Get started with RAG using a prompt flow sample (preview) – Azure Machine Learning | Microsoft Learn

The figure shows an example architecture that can be built with Azure ML’s prompt flow. Its main advantage is the straightforward integration of custom models, whether they are built for embedding, for constructing search indexes, or as customized LLMs. Azure infrastructure provides the connections that let these models be used within the prompt flow environment.
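In a prompt flow, steps such as formatting retrieved chunks before they reach the LLM are often written as Python nodes. The sketch below shows what such a node’s function might look like. It assumes each search result is a dictionary with `text` and `source` keys, which is a hypothetical shape, and it omits prompt flow’s `tool` decorator so that it runs with only the standard library; the function name is also illustrative.

```python
from typing import Dict, List


# In a prompt flow Python node this function would carry the `tool`
# decorator from the promptflow package; it is left undecorated here.
def generate_prompt_context(search_results: List[Dict[str, str]]) -> str:
    """Formats retrieved chunks (assumed keys: 'text', 'source') as numbered context."""
    blocks = []
    for i, result in enumerate(search_results, start=1):
        # Numbering each chunk lets the LLM prompt ask for citations like [1].
        blocks.append(f"[{i}] ({result['source']})\n{result['text']}")
    return "\n\n".join(blocks)


results = [
    {"text": "Hello", "source": "a.md"},
    {"text": "World", "source": "b.md"},
]
context = generate_prompt_context(results)
```

The returned string would typically feed a downstream LLM node’s prompt template alongside the user’s question.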

Azure OpenAI On Your Data

If you prefer a hassle-free, low-code option for retrieval, consider the consumable API (Application Programming Interface) provided by Azure OpenAI, which grounds responses in your own data through a search index. You configure it to connect to your Azure AI Search index, so you can interact with Azure OpenAI and receive responses based on your specific data. To use the “Azure OpenAI On Your Data” feature, you provide the URL (Uniform Resource Locator) and key of the Azure AI Search index that holds your chunked data. This feature lets you use advanced AI models such as GPT-35-Turbo and GPT-4 on your enterprise data without any model training or fine-tuning.
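As a rough sketch of the REST option, the following Python code builds (but does not send) a chat completions request that points Azure OpenAI at an Azure AI Search index. It assumes the `2024-02-01` API version, in which the index is described in a `data_sources` array with type `azure_search` and API-key authentication; earlier preview versions used different property names, so verify against the On Your Data reference for your version. All endpoints, names, and keys are placeholders.

```python
import json
import urllib.request

API_VERSION = "2024-02-01"  # assumed version that uses the "data_sources" field


def build_on_your_data_request(openai_endpoint, deployment, openai_key,
                               search_endpoint, search_index, search_key,
                               question):
    """Chat request grounded in an Azure AI Search index (constructed, not sent)."""
    url = (f"{openai_endpoint}/openai/deployments/{deployment}/chat/completions"
           f"?api-version={API_VERSION}")
    body = {
        "messages": [{"role": "user", "content": question}],
        # Tells the service which search index to retrieve from and how to
        # authenticate to it; no model training or fine-tuning is involved.
        "data_sources": [
            {
                "type": "azure_search",
                "parameters": {
                    "endpoint": search_endpoint,
                    "index_name": search_index,
                    "authentication": {"type": "api_key", "key": search_key},
                },
            }
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": openai_key},
        method="POST",
    )


req = build_on_your_data_request(
    "https://example.openai.azure.com", "gpt-4", "<openai-key>",
    "https://example.search.windows.net", "docs", "<search-key>",
    "Summarize our travel policy.")
```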

Through this service, you can chat with and analyze your data with improved accuracy. You can also specify the data sources that support responses, and responses include citations, so answers reflect the latest information available in your designated sources. Azure OpenAI On Your Data is available through a REST API, an SDK, or a web-based interface in Azure OpenAI Studio. Additionally, you can create a web application that connects to your data for an enhanced chat solution, or deploy it directly as a copilot in Copilot Studio (preview).

Azure Component-Based Custom Solutions

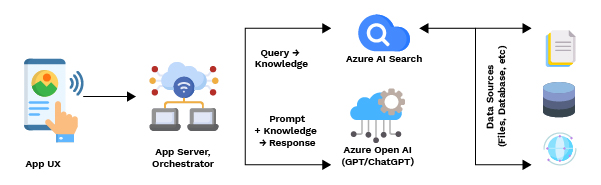

You can also use each component individually to build your own custom solution. At a high level, the pattern follows this sequence:

– Start with a user query or request (prompt).

– Send it to Azure AI Search to find relevant information.

– Send the top-ranked search results to the LLM.

– Use the natural language understanding and reasoning capabilities of the LLM to generate a response to the initial prompt.
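The four steps above map naturally onto a small orchestration function. In this hedged sketch, `search` and `complete` are hypothetical callables you would wire to Azure AI Search and Azure OpenAI; injecting them keeps the pattern testable with fakes. It assumes each hit carries the `@search.score` relevance value that Azure AI Search returns and a `content` field, whose actual name depends on your index schema.

```python
from typing import Callable, Dict, List

SearchFn = Callable[[str], List[Dict]]   # hypothetical: prompt -> search hits
CompleteFn = Callable[[str], str]        # hypothetical: prompt -> LLM answer


def answer(prompt: str, search: SearchFn, complete: CompleteFn,
           top_k: int = 3) -> str:
    # Steps 1 and 2: take the user's prompt and send it to search.
    hits = search(prompt)
    # Step 3: keep only the top-ranked results.
    top = sorted(hits, key=lambda h: h["@search.score"], reverse=True)[:top_k]
    context = "\n".join(h["content"] for h in top)
    # Step 4: let the LLM reason over the prompt plus the retrieved context.
    return complete(f"Context:\n{context}\n\nQuestion: {prompt}")


# Example with fakes: the "LLM" simply echoes the prompt it receives.
fake_hits = [
    {"@search.score": 0.1, "content": "d"},
    {"@search.score": 0.9, "content": "a"},
    {"@search.score": 0.5, "content": "c"},
    {"@search.score": 0.7, "content": "b"},
]
result = answer("q", search=lambda p: fake_hits, complete=lambda p: p)
```

Azure AI Search already returns results ordered by score, so the re-sort is defensive; it matters mainly if you merge hits from several queries.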

Azure AI Search supplies inputs to the LLM prompt but does not train the model. The RAG architecture involves no additional training: the LLM is pretrained on public data and generates responses augmented by information from the retriever.

FIG 1.3 Ref: RAG and generative AI – Azure AI Search | Microsoft Learn. Architecture diagram depicting information retrieval with search and ChatGPT.

RAG patterns that incorporate Azure AI Search comprise the following elements:

– App UX (web app) for user experience

– App server or orchestrator (integration and coordination layer)

– Azure AI Search (information retrieval system)

– Azure OpenAI (LLM for generative AI)

The web app delivers the user experience, including presentation, context, and user interaction. User queries or prompts originate here. Inputs pass through the integration layer, going first to information retrieval to obtain search results, and also to the LLM to set the context and intent.

The app server or orchestrator is the integration code that coordinates the handoffs between information retrieval and the LLM. One common choice is LangChain, an open-source framework for building applications with large language models (LLMs). It can orchestrate the workflow and integrates with Azure AI Search, which can act as a retriever within that workflow.

The information retrieval system provides the searchable index, the query logic, and the payload (query response). The search index can contain vector or non-vector content. Many samples and demos include vector fields, but they are not required. Queries run on the existing search engine in Azure AI Search, which supports both keyword (term) and vector queries. The index is created in advance from a schema you define and is loaded with content from files, databases, or storage.
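To illustrate the point about vector and non-vector content, here is a sketch of an index schema with both a keyword-searchable text field and a vector field, plus a hybrid (keyword plus vector) query body, written as Python dictionaries. It assumes the `2023-11-01` REST API shape (`vectorSearch` algorithms and profiles, `vectorQueries` in the query); the index, field, and profile names are hypothetical, and `dimensions: 1536` assumes an embedding model such as `text-embedding-ada-002`. Dropping `vectorQueries` gives a plain keyword query.

```python
import json

VECTOR_DIMENSIONS = 1536  # assumption: matches a text-embedding-ada-002 model

# Hypothetical index definition, sent with PUT /indexes/{name}.
index_schema = {
    "name": "rag-index",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True},
        # Plain text field used by keyword (term) queries.
        {"name": "content", "type": "Edm.String", "searchable": True},
        # Vector field used by vector queries.
        {
            "name": "contentVector",
            "type": "Collection(Edm.Single)",
            "searchable": True,
            "dimensions": VECTOR_DIMENSIONS,
            "vectorSearchProfile": "rag-vector-profile",
        },
    ],
    "vectorSearch": {
        "algorithms": [{"name": "rag-hnsw", "kind": "hnsw"}],
        "profiles": [{"name": "rag-vector-profile", "algorithm": "rag-hnsw"}],
    },
}


def hybrid_query(text: str, embedding: list, k: int = 3) -> dict:
    """Query body combining a keyword search with a vector query."""
    if len(embedding) != VECTOR_DIMENSIONS:
        raise ValueError("embedding length must match the vector field dimensions")
    return {
        "search": text,
        "vectorQueries": [
            {"kind": "vector", "vector": embedding, "fields": "contentVector", "k": k}
        ],
        "top": k,
    }


query = hybrid_query("blob storage", [0.0] * VECTOR_DIMENSIONS)
print(json.dumps(index_schema, indent=2))
```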

The LLM receives the original prompt along with the results from Azure AI Search and generates a response based on them. With ChatGPT, the interaction is usually a back-and-forth conversation. With a completion model such as Davinci, the prompt might instead yield a single, fully composed answer. Although Azure solutions commonly use Azure OpenAI, there is no hard dependency on that service.

Azure AI Search does not provide built-in LLM integration, web front ends, or vector encoding (embeddings) out of the box, so you need custom code to handle these parts of the solution.
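Because embeddings are generated outside Azure AI Search in this pattern, the solution needs its own encoding step. The Python sketch below is a toy: `toy_embed` is a hypothetical feature-hashing stand-in for a real embedding model (such as an Azure OpenAI embeddings deployment), and the exhaustive cosine-similarity ranking is an in-memory analogue of what a vector query does. It only shows the shape of the custom code, not a replacement for a real model or index.

```python
import hashlib
import math
import re

DIM = 64  # toy dimensionality; real embedding models use far more dimensions


def toy_embed(text: str) -> list:
    """Hypothetical embedding: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives a stable bucket across runs (unlike Python's built-in hash).
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list, b: list) -> float:
    """Cosine similarity; toy_embed outputs are already unit length."""
    return sum(x * y for x, y in zip(a, b))


def vector_search(query: str, docs: list, k: int = 2) -> list:
    """Ranks every document by cosine similarity to the query embedding."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(d)), d) for d in docs]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [d for _, d in scored[:k]]


docs = ["azure blob storage", "prompt flow editor"]
best = vector_search("azure blob storage", docs, k=1)
```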

Conclusion

Azure infrastructure offers several ways to implement Retrieval-Augmented Generation (RAG), depending on your needs. Azure AI Enrichments suit knowledge bases that are updated infrequently, while Azure ML’s prompt flow is well suited to prompt engineering projects. Azure OpenAI On Your Data provides a hassle-free, low-code option for responses grounded in your data, and Azure component-based custom solutions offer the flexibility to build your own. The right choice depends on factors such as update frequency and customization requirements. With these options, Azure enables developers and businesses to use RAG to build targeted, powerful AI solutions. As this field continues to evolve, the combination of RAG and Azure infrastructure points toward AI-driven experiences that are not just intelligent but also well informed and trustworthy.