Production-ready applications need orchestrators, especially when they are built on microservices or deployed across several containers. In a microservice-based architecture, each microservice owns its own model and data, which makes it autonomous from a development and deployment point of view. But even a more conventional system composed of several services, such as one that follows a Service-Oriented Architecture (SOA), consists of multiple containers or services that together form a single business application. These applications must be deployed as a distributed system, and distributed systems are difficult to scale out and manage. An orchestrator handles that complexity and makes a multi-container application scalable and production-ready.

Deployment units

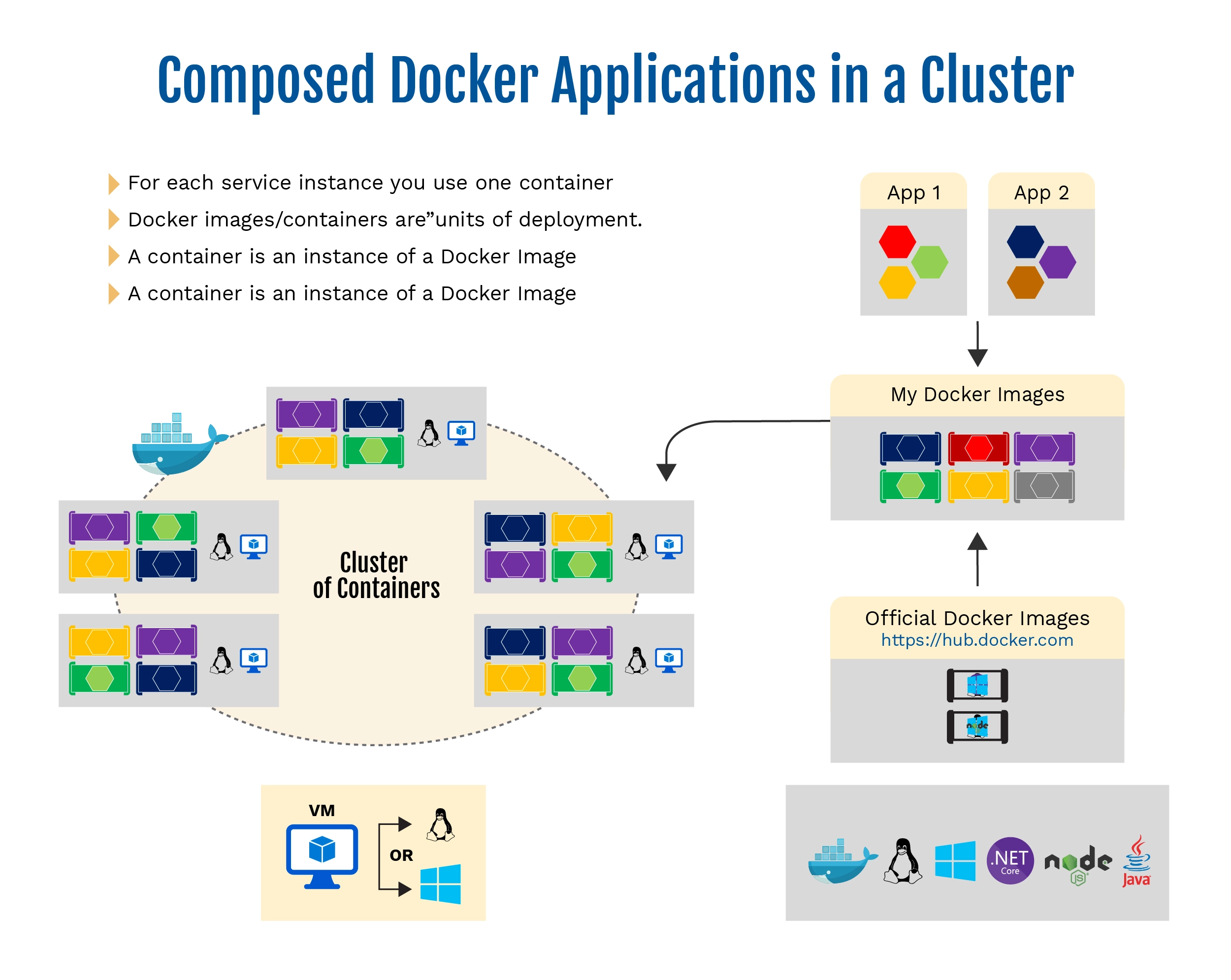

Docker containers have become a common way to deploy software. One container encapsulates one instance of a service. Containers are the “units of deployment,” and each container is an instance of a Docker image. A host handles many of these containers, which makes this a practical and efficient way to deploy.

However, this approach raises several issues. The most important is how to manage these composed applications in terms of load-balancing, routing, and orchestration. If these issues are not addressed, the resulting complications can outweigh the advantages of adopting Docker containers. A deployment based on Docker containers therefore needs a well-thought-out approach to load-balancing, routing, and orchestration.

On a single Docker host, the Docker Engine is enough to manage single image instances. It falls short when several containers are spread over several hosts, especially for more complex distributed applications. In most cases a management platform is needed: one that automatically starts containers, scales them out with multiple instances per image, and suspends or shuts them down when needed. Ideally, it also controls how containers access resources such as the network and data storage. This degree of automation and control is essential for managing sophisticated distributed applications that span several servers.

To move beyond managing individual containers or simple composed applications, and toward larger business applications built on microservices, you need orchestration and clustering platforms.

From an architecture and development standpoint, if you are building large enterprise applications composed of microservices, it is important to understand the platforms and products that support these advanced scenarios.

Orchestrators and Clusters

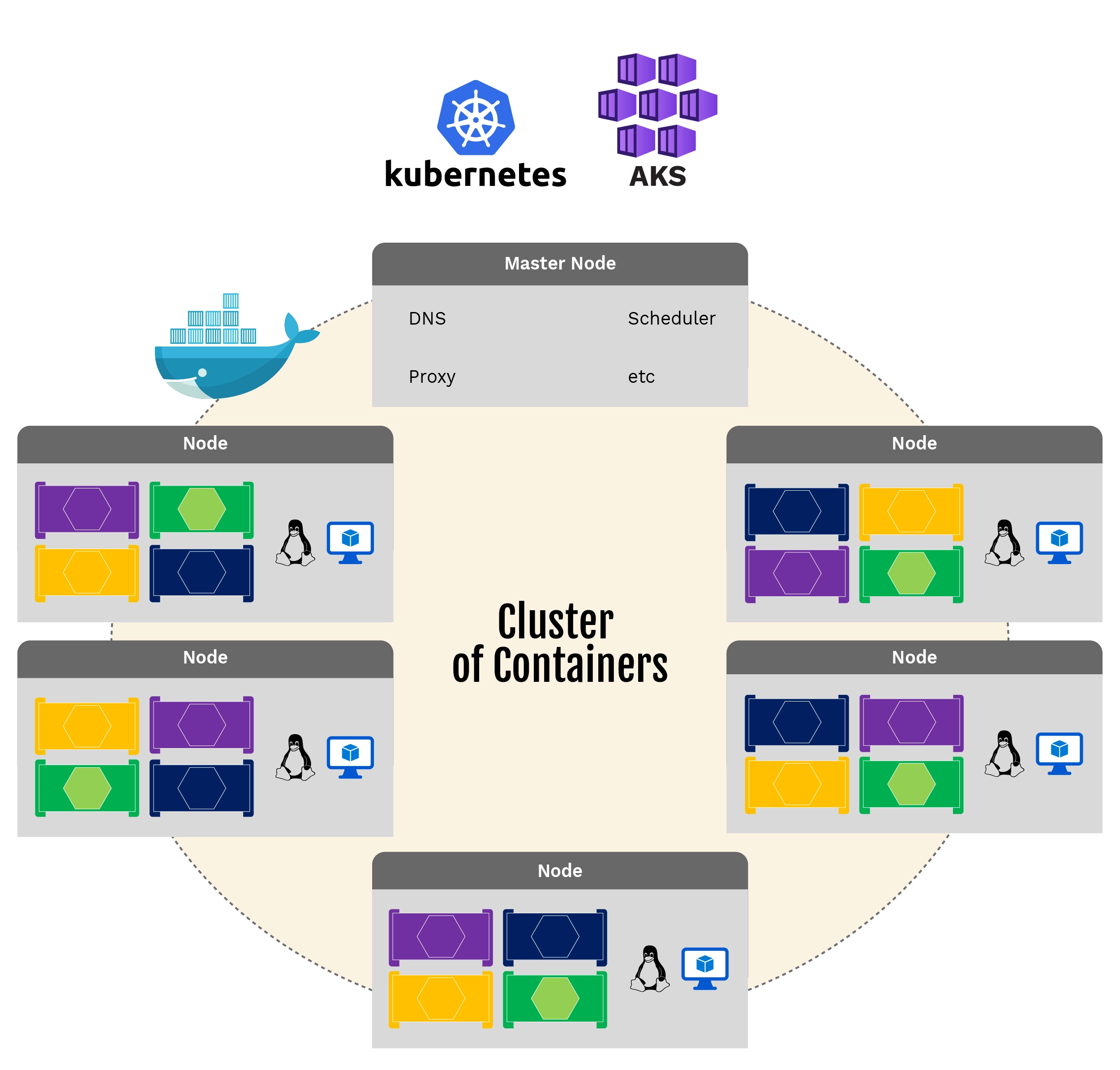

When you scale out an application across many Docker hosts, as with a large microservice-based application, it becomes crucial to manage all of those hosts as a single cluster. Doing so abstracts away the complexity of the underlying platform. This is what container clusters and orchestrators provide. One such orchestrator is Kubernetes, which is available in Azure through Azure Kubernetes Service.

Schedulers

Schedulers play a crucial role in container orchestration. They give administrators the ability to start containers in a cluster, frequently through a user interface. A cluster scheduler has several responsibilities: using the cluster’s resources efficiently, honoring user-imposed constraints, load-balancing containers across nodes or hosts, and staying robust against errors while providing high availability.

Because the concepts of a cluster and a scheduler are closely related, many vendors offer products that provide both. The following table lists the most important platform and software options for clusters and schedulers. These orchestrators are generally available on public clouds such as Azure.
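The scheduler duties described above can be illustrated with a toy placement function. This is a minimal sketch, not how Kubernetes or any real scheduler is implemented: the `Node` and `ContainerRequest` types, the label-matching rule, and the "lowest CPU utilization wins" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: float                      # total CPU cores on the node
    labels: dict = field(default_factory=dict)
    healthy: bool = True                     # unhealthy nodes receive no work
    cpu_used: float = 0.0
    containers: list = field(default_factory=list)

    @property
    def cpu_free(self) -> float:
        return self.cpu_capacity - self.cpu_used


@dataclass
class ContainerRequest:
    name: str
    cpu: float                               # CPU cores requested
    required_labels: dict = field(default_factory=dict)  # user-imposed constraint


def schedule(request, nodes):
    """Place one container; return the chosen node, or None if nothing fits."""
    candidates = [
        n for n in nodes
        if n.healthy                                        # error resistance
        and n.cpu_free >= request.cpu                       # resource fit
        and all(n.labels.get(k) == v                        # constraints
                for k, v in request.required_labels.items())
    ]
    if not candidates:
        return None
    # Load-balance: pick the least utilized node; the name breaks ties
    # so the result is deterministic.
    best = min(candidates, key=lambda n: (n.cpu_used / n.cpu_capacity, n.name))
    best.cpu_used += request.cpu
    best.containers.append(request.name)
    return best


# Hypothetical cluster and workload, for illustration only.
nodes = [
    Node("node-a", 4.0, {"os": "linux"}),
    Node("node-b", 4.0, {"os": "linux"}),
    Node("node-c", 4.0, {"os": "windows"}),
    Node("node-d", 8.0, {"os": "linux"}, healthy=False),
]
requests = [
    ContainerRequest("web-1", 1.0, {"os": "linux"}),
    ContainerRequest("web-2", 1.0, {"os": "linux"}),
    ContainerRequest("web-3", 1.0, {"os": "linux"}),
    ContainerRequest("legacy-svc", 2.0, {"os": "windows"}),
    ContainerRequest("too-big", 8.0, {"os": "linux"}),
]

placements = {}
for req in requests:
    node = schedule(req, nodes)
    placements[req.name] = node.name if node else None

for name, node_name in placements.items():
    print(f"{name} -> {node_name}")
```

A real scheduler weighs far more signals (memory, affinity, disruption budgets, and so on), but the same three steps of filtering, scoring, and binding are a reasonable mental model.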

Software systems for scheduling, orchestration, and container clustering

| Platform | Description |
| --- | --- |
| Kubernetes | Kubernetes is an open-source product that provides cluster infrastructure, container scheduling, and orchestration. It automates the deployment, scaling, and operation of application containers across clusters of hosts. By grouping application containers into logical units for easier management and discovery, Kubernetes offers a container-centric infrastructure. Kubernetes is mature on Linux and less mature on Windows. |
| Azure Kubernetes Service (AKS) | Azure Kubernetes Service (AKS) is a managed container orchestration service in Azure that simplifies deploying, managing, and operating Kubernetes clusters. |
| Azure Container Apps | Azure Container Apps is a managed serverless container service in Azure for building and deploying modern apps at scale. |

A Complete Guide to Using Container-Based Orchestrators in Microsoft Azure

Several cloud offerings support Docker containers, Docker clusters, and orchestration, including Google Container Engine, Amazon EC2 Container Service, and Microsoft Azure. Microsoft Azure provides Docker cluster and orchestrator support through Azure Kubernetes Service (AKS).

Using Azure Kubernetes Service to Increase Efficiency: A Realistic Method

A Kubernetes cluster pools several Docker hosts and exposes them as a single virtual Docker host. You can deploy multiple containers into the cluster and scale out with any number of container instances. The cluster takes care of the complex management work, such as scalability and health.
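To make "any number of container instances" concrete, the following minimal sketch builds a Kubernetes `apps/v1` Deployment as a plain Python dictionary and serializes it to JSON, which `kubectl` accepts alongside YAML. The service name, image reference, port, and replica count are hypothetical values chosen for illustration.

```python
import json


def deployment_manifest(name, image, replicas, port):
    """Return a Kubernetes apps/v1 Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Number of identical container instances the cluster keeps running.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical service and image names.
manifest = deployment_manifest(
    name="catalog-api",
    image="example.azurecr.io/catalog-api:1.0",
    replicas=3,
    port=80,
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saving the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` would ask the cluster to run three instances; changing `replicas` and applying again scales the service out or in.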

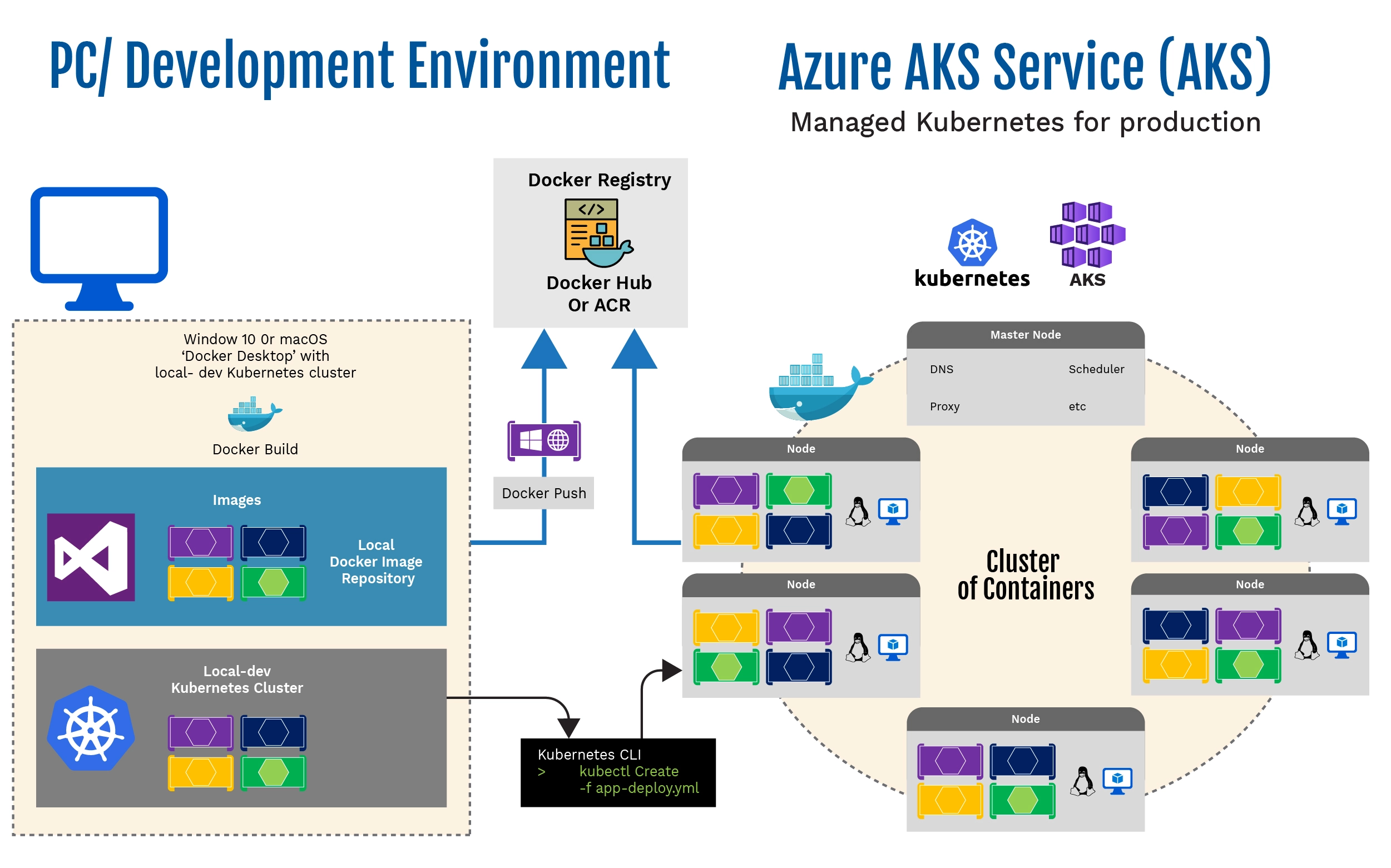

Azure Kubernetes Service (AKS) simplifies creating, configuring, and managing a cluster of virtual machines in Azure that are preconfigured to run containerized applications. Because it uses an optimized configuration of popular open-source scheduling and orchestration tools, AKS lets you apply your existing skills, or draw on a large and growing body of community expertise, to deploy and manage container-based applications on Microsoft Azure.

AKS optimizes the configuration of popular Docker clustering open-source tools and technologies specifically for Azure. The result is an open solution that keeps both your containers and your application configuration portable. You select the size, the number of hosts, and the orchestrator tools, and AKS handles the rest.

In a Kubernetes cluster, a master node, which is a virtual machine (VM), handles most of the cluster’s coordination. From an application perspective, the remaining nodes are managed as a single pool to which you deploy containers. This lets you scale out to many containers, potentially several thousand.

Creating a Successful Kubernetes Development Environment: A Comprehensive Guide

In July 2018, Docker announced that Kubernetes can also run on a single development machine (Windows 10 or macOS) by installing Docker Desktop. As seen in the following picture, you can later deploy from this environment to the cloud (AKS) for further integration testing.

Getting Started with Azure Kubernetes Service (AKS): An Introduction

To begin using Azure Kubernetes Service (AKS), deploy an AKS cluster from the Azure portal or with the Command Line Interface (CLI).
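As a rough sketch of the CLI route, the helper functions below assemble the Azure CLI commands that create a cluster and then fetch its credentials for `kubectl`. The functions only build the argument lists and do not run anything; the resource group and cluster names are hypothetical, and the sketch assumes the Azure CLI is installed, you are signed in, and the resource group already exists.

```python
import shlex


def aks_create_command(resource_group, cluster_name, node_count):
    """Build the `az aks create` call for a small cluster."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--node-count", str(node_count),
        "--generate-ssh-keys",
    ]


def aks_get_credentials_command(resource_group, cluster_name):
    """Build the `az aks get-credentials` call that configures kubectl."""
    return [
        "az", "aks", "get-credentials",
        "--resource-group", resource_group,
        "--name", cluster_name,
    ]


# Hypothetical names, for illustration only.
commands = [
    aks_create_command("my-rg", "my-aks", node_count=2),
    aks_get_credentials_command("my-rg", "my-aks"),
    ["kubectl", "get", "nodes"],
]

for cmd in commands:
    # Print a copy-pasteable shell line. To execute for real, pass the
    # list to subprocess.run(cmd, check=True) instead.
    print(shlex.join(cmd))
```

Keeping the commands as argument lists rather than strings avoids shell-quoting problems if a name ever contains spaces or special characters.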

(See the “Deploy an Azure Kubernetes Service (AKS) cluster” tutorial for further details on how to set up a Kubernetes cluster in Azure.)

AKS does not charge for any of the software it installs by default. All default options are implemented with open-source software. AKS is available for multiple virtual machines in Azure. You pay only for the compute instances you choose and for the other underlying infrastructure resources you consume, such as storage and networking. There are no additional charges for AKS itself.

Helm Charts for Effective Deployment into Kubernetes Clusters: A Comprehensive Guide

You can deploy an application to a Kubernetes cluster in its native format (.yaml files) with the original kubectl.exe CLI tool. For more complex Kubernetes applications, such as intricate microservice-based applications, Helm is recommended.

Helm charts help you define, version, install, share, upgrade, or roll back even the most complex Kubernetes application.

Adopting Helm is also advised because other Kubernetes environments in Azure, such as Azure Dev Spaces, are built on Helm charts.

Helm is maintained by the Cloud Native Computing Foundation (CNCF) in collaboration with Microsoft, Google, Bitnami, and the Helm contributor community.
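The install, upgrade, and roll back operations map onto a small set of Helm CLI commands. The sketch below only assembles those commands as argument lists; the release name, chart path, namespace, and the `replicaCount` value key are hypothetical, and whether a chart honors `replicaCount` depends on how that chart is written.

```python
import shlex


def helm_upgrade_install(release, chart, namespace, values=None):
    """Build `helm upgrade --install`, which installs the release if it
    does not exist yet and upgrades it otherwise."""
    cmd = ["helm", "upgrade", "--install", release, chart,
           "--namespace", namespace]
    # Sort the overrides so the generated command is deterministic.
    for key, value in sorted((values or {}).items()):
        cmd += ["--set", f"{key}={value}"]
    return cmd


def helm_rollback(release, revision, namespace):
    """Build `helm rollback` to return a release to an earlier revision."""
    return ["helm", "rollback", release, str(revision),
            "--namespace", namespace]


# Hypothetical release, chart path, and value key.
deploy_cmd = helm_upgrade_install(
    "catalog", "./charts/catalog-api", "shop", {"replicaCount": 3}
)
rollback_cmd = helm_rollback("catalog", 1, "shop")

for cmd in (deploy_cmd, rollback_cmd):
    # To execute for real, pass the list to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Because every upgrade creates a new numbered revision of the release, `helm history <release>` shows which revision number to pass to the rollback.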

Extra sources