eInfochips is a Specialty OEM Partner on the NVIDIA Design Network. They offer CUDA consulting, migration and system design services for companies looking to use NVIDIA GPUs in their products. This post from eInfochips describes the performance improvement they achieved for seismic data processing using the NVIDIA Tesla K40.

Seismic Data Processing

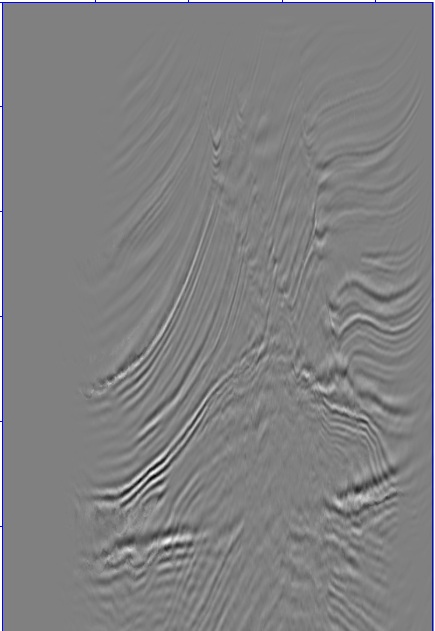

Seismic processing is an important part of the oil and gas exploration workflow, producing good-quality images of the subsurface. Seismologists use it to turn acquired data into an image from which the subsurface structure can be inferred. Sound waves are directed into the Earth, and receivers (geophones) listen to the echoes. The data collected from the receivers can reach petabytes and often takes weeks to process. Computer programs turn it into an image of the underground, from which specialists interpret the subsurface structure.

Kirchhoff pre-stack depth migration is one of the most commonly used tools for processing seismic data. It computes travel times from a velocity model by ray tracing, so the method requires a velocity model as input. It can efficiently image steep dips using turning waves. The source code for this algorithm was taken from Seismic UNIX (SU).

GPU in Oil & Gas Exploration

Oil and gas exploration requires processing large volumes of raw data samples, and it can take a very long time to estimate the probability of finding oil in a given project. Such large volumes of data can be processed in parallel, making full use of the available memory bandwidth, to reduce processing time significantly. Here we highlight the role that a GPU can play in oil and gas exploration: analysis that may need a few days of processing on a standard CPU can be accelerated on a GPU and completed in a matter of hours!

Results

The porting process started by measuring the application's performance on the CPU. Processing 74 MB (23040 traces) of seismic data on an Intel Core i7-based CPU took around 7 minutes and 28 seconds (448 seconds). The tables below summarize the overall results and the improvement achieved at each optimization step.

The performance achieved with the GPU-accelerated version is as follows.

| Platform | Number of Traces | Execution Time (seconds) |
|---|---|---|
| CPU (Intel Core i7-2600 @3.40GHz-8) | 23040 | 448 |
| CPU (OpenMP Version) | 23040 | 160 |
| GPU (Tesla K40) | 23040 | 6.4 |

The optimization steps that produced this improvement, applied in stages, are listed below.

| Steps | Execution Time (seconds) | Performance Factor (vs. CPU) |
|---|---|---|
| CPU execution time | 448 | 1X |
| Basic algorithm porting | 92 | 4.8X |
| Register usage optimization | 75 | 5.9X |
| GPU Boost | 72 | 6.2X |
| Increase Occupancy | 66 | 6.7X |
| Improve usage of L1 Cache | 24 | 18X |
| Concurrent kernel execution | 9 | 49X |
| Increase Memory Bandwidth | 7.3 | 61X |
| Use Fast Math Option | 6.6 | 67X |
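The performance factor in the staged table is the 448-second CPU time divided by each step's execution time. The small C helper below reproduces the published column; the truncation rule it applies (one decimal place below 10X, whole numbers from 10X up) is inferred from the listed values rather than stated in the original, and `performance_factor` is a hypothetical name.

```c
#include <assert.h>

/* Speed-up of one optimization step relative to the CPU baseline.
 * Assumption: values are truncated (not rounded) to one decimal below
 * 10X and to a whole number from 10X up, as the table appears to do. */
double performance_factor(double cpu_seconds, double step_seconds)
{
    assert(cpu_seconds > 0.0 && step_seconds > 0.0);
    double f = cpu_seconds / step_seconds;
    if (f < 10.0)
        return (double)(long)(f * 10.0) / 10.0; /* e.g. 4.87 -> 4.8 */
    return (double)(long)f;                      /* e.g. 18.67 -> 18 */
}
```

For example, `performance_factor(448, 24)` returns 18 (448 / 24 is about 18.7), matching the L1 cache row.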

Of the 980 lines of C code in total, 190 lines (about 20%) were converted to GPU code to achieve a 25X performance improvement. A partial output image from the implementation is shown here.
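The headline figures can be cross-checked against the first results table. The arithmetic below shows one reading that is consistent with the published numbers, offered as an inference rather than the authors' stated method: the 25X figure matches the speedup over the OpenMP CPU version, while the speedup over the baseline CPU run is larger.

```latex
\begin{aligned}
\text{GPU vs. OpenMP CPU:} &\quad \frac{160\ \text{s}}{6.4\ \text{s}} = 25 \\
\text{GPU vs. baseline CPU:} &\quad \frac{448\ \text{s}}{6.4\ \text{s}} = 70 \\
\text{Share of code converted:} &\quad \frac{190}{980} \approx 0.194 \approx 20\%
\end{aligned}
```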

Conclusion

Using NVIDIA CUDA programming and best practices, we were able to port the Kirchhoff depth migration application to the GPU in a short period of time and achieve a 25X improvement in execution performance. eInfochips is a Specialty OEM Partner on the NVIDIA Design Network, and we offer CUDA consulting, migration and system design services for companies looking to use NVIDIA GPUs in their products. To estimate the performance benefit for your algorithm or application, please write to marketing@einfochips.com.