Introduction:

Autonomous Mobile Robots (AMRs) are redefining industries, from logistics to healthcare, by offering intelligent and adaptable solutions for complex navigation tasks. Imagine a robot that can seamlessly navigate dynamic environments, integrating the precision of LiDAR and the versatility of cameras to perceive and understand its surroundings. What sets this system apart is an innovative localization algorithm designed to elevate its spatial awareness and decision-making capabilities.

The need for precise navigation algorithms arises from the challenges posed by inaccurate localization of AMRs. These inaccuracies are caused by factors such as noisy or unreliable sensor data (e.g., from LiDAR, cameras, or IMUs), dynamic environments with changing layouts or obstacles, weak sensor fusion, and reliance on outdated or static maps. Environmental conditions, including poor lighting, reflections, or signal interference (e.g., affecting GPS or Wi-Fi), further degrade localization accuracy, which is why robust and adaptive algorithms matter.

One compelling example of addressing this challenge is an AMR equipped with an Intel RealSense camera and a LiDAR, designed to autonomously detect an object and align itself to a fixed position and orientation relative to it. This blog explains how an AMR can reorient itself to a precise location on the floor with the help of computer vision and AI algorithms. At the core of the approach lies YOLOv8, an object detection model.

This blog briefly explains an AMR reorientation pipeline that combines the ROS 2 navigation stack (Nav2) with our object tracking algorithm. The results can be useful for applications such as localization, auto-docking, industrial automation, and accurate pick-and-place.

What Makes AMR Reorientation Crucial?

AMRs use sensors (cameras, LiDAR, IMUs, etc.) to estimate their position and orientation relative to their surroundings when navigating autonomously. Slippage, sensor errors, or obstructions may cause the robot to deviate from its intended path over time. Reorientation allows the robot to adjust its heading, correct its trajectory, and keep making efficient progress towards its destination. Without realignment, the robot will either drift off track or fail to reach its planned goal.

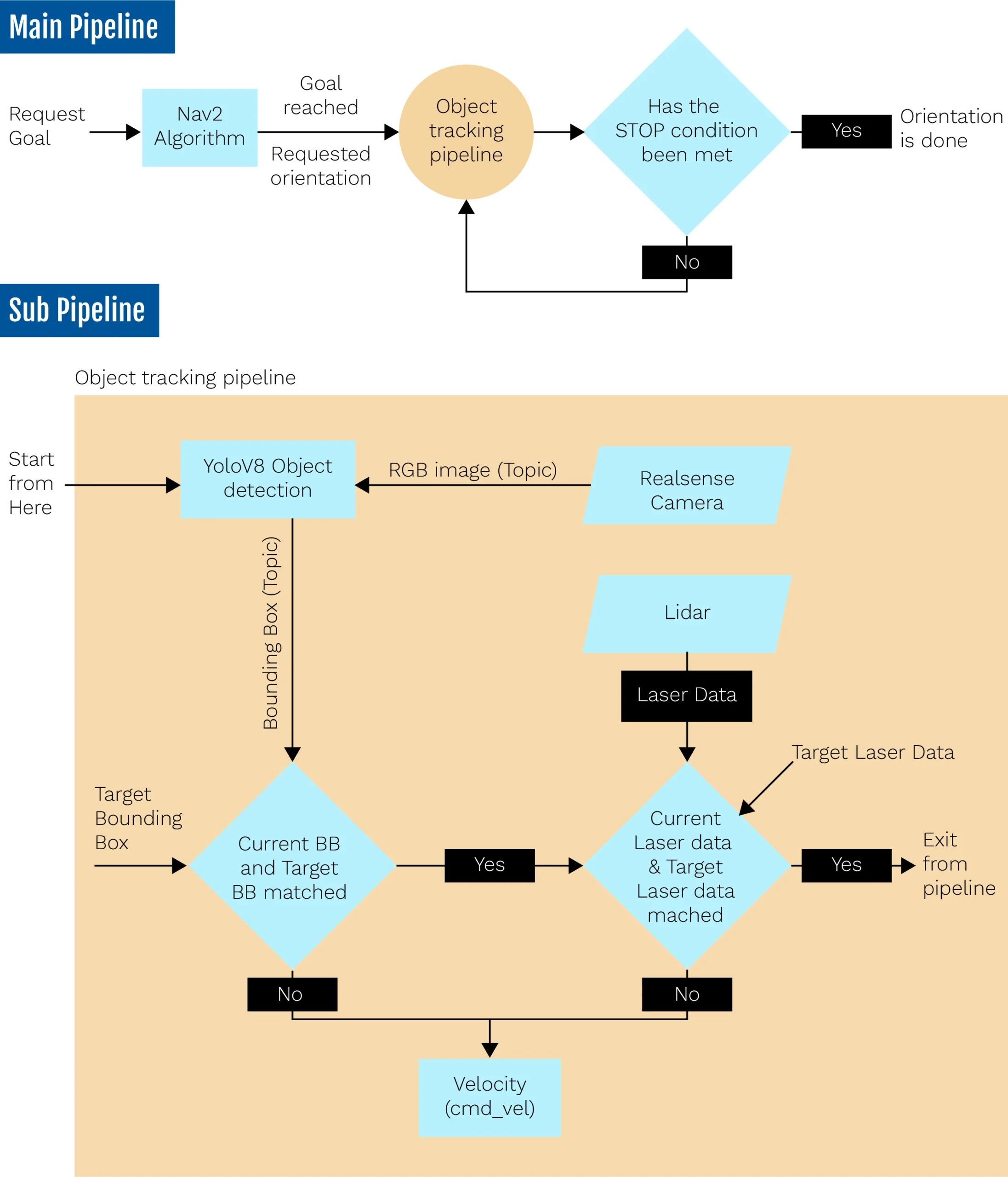

Flow-diagram:

The algorithm follows a systematic pipeline to enable precise navigation and alignment of the AMR with its target object. The figure shown below is a breakdown of the process.

Flow-diagram of entire pipeline

- Main Pipeline for Navigation:

The process starts with the Nav2 algorithm, which handles navigation by receiving a goal and guiding the AMR toward the destination. Once the navigation is complete, the AMR transitions to orientation and object tracking, continuously tracking the target object (e.g., a mug) using sensory inputs from an RGB camera and object detection capabilities.

- Object Tracking with Bounding Box (sub-pipeline):

The YOLOv8 object detection model identifies the target object within the camera’s field of view, generating a bounding box to determine the object’s position in the camera’s frame of reference. This data is used to guide the AMR to a specified distance from the object. By comparing current bounding box data with predefined target values, the AMR calculates the object’s relative distance and angle, adjusting its movements via the cmd_vel topic.

- LiDAR-Based Fine-Tuning (sub-pipeline):

After meeting the bounding box-based stopping condition, the AMR switches to verifying alignment using LiDAR data. The LiDAR measures the distance between the AMR and nearby surfaces to ensure precise positioning. If the current laser data matches the predefined target data, the alignment process is complete. If the AMR struggles to reach the target position or takes too long, the laser scan data is used for lateral adjustments, correcting sideways errors and ensuring accurate alignment.

At the start of the process, the AMR measures two critical parameters: the distance to the target object using YOLO’s bounding box data from the RGB camera and the distance to a surface close by using the LiDAR sensor data. These initial measurements are stored as the reference values for subsequent use. Once the AMR reaches its navigation goal, the tracking algorithm is activated to fine-tune its position and orientation relative to the target object.

The algorithm first focuses on YOLO’s bounding box data to align the AMR with the object. Based on the current bounding box information, the AMR adjusts its lateral (left or right) position to match the object’s horizontal alignment with the stored reference. Following this, it moves forward or backward to align the size of the bounding box (height or width) with the stored dimensions, ensuring the object is at the correct distance. When the bounding box dimensions are within an acceptable tolerance range, the algorithm shifts its focus to LiDAR data.
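The bounding box stage described above can be sketched as a simple proportional controller. This is a minimal illustration, not the implementation used on the robot: the `BBox` class, the gains, the pixel tolerances, and the speed limit are all hypothetical values chosen for the example. The lateral output follows the ROS convention that positive values point to the robot's left; whether it is sent as `linear.y` (holonomic base) or `angular.z` (differential drive) on `cmd_vel` is an assumption that depends on the platform.

```python
from dataclasses import dataclass


@dataclass
class BBox:
    """Axis-aligned bounding box in image pixels."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center_x(self) -> float:
        return (self.x_min + self.x_max) / 2.0

    @property
    def height(self) -> float:
        return self.y_max - self.y_min


def clamp(value, limit):
    """Limit value to the range [-limit, +limit]."""
    return max(-limit, min(limit, value))


def bbox_command(current, reference, center_tol_px=10.0, height_tol_px=5.0,
                 k_lateral=0.002, k_forward=0.004, max_speed=0.15):
    """Return (forward, lateral, done) from the current and reference boxes.

    Lateral alignment is corrected first; distance (box height) second.
    All gains and tolerances are illustrative assumptions.
    """
    center_error = reference.center_x - current.center_x
    height_error = reference.height - current.height

    if abs(center_error) > center_tol_px:
        # Object is right of the reference -> negative error -> move right.
        return 0.0, clamp(k_lateral * center_error, max_speed), False
    if abs(height_error) > height_tol_px:
        # Box smaller than the reference -> object too far -> move forward.
        return clamp(k_forward * height_error, max_speed), 0.0, False
    return 0.0, 0.0, True
```

Correcting one axis at a time mirrors the order given in the text (sideways first, then forward or backward) and keeps each correction easy to reason about, at the cost of a slightly slower approach than a combined controller.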

Using LiDAR measurements, the AMR fine-tunes its alignment by comparing the current distance to a surface on its left or right side with the reference LiDAR data. The LiDAR ensures precise positioning by enabling lateral adjustments and verifying the AMR’s distance from the surface. Throughout this process, however, the AMR continuously monitors the bounding box data. If a significant deviation is detected in the bounding box alignment (e.g., due to slight shifts in position or sensor inaccuracies), the algorithm temporarily switches back to bounding box matching to correct the object’s alignment before returning to LiDAR-based fine-tuning.

This iterative approach between YOLO bounding box data and LiDAR measurements ensures the AMR achieves precise alignment and positioning relative to the object and its environment, even in dynamic scenarios.
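The switching between the two stages can be pictured as a small state machine. The sketch below is one possible reading of the description, under stated assumptions: the `Stage` names, the threshold values, and the use of a larger threshold for falling back to bounding box matching than for leaving it are all hypothetical choices for illustration.

```python
from enum import Enum


class Stage(Enum):
    BBOX = "bbox_matching"
    LIDAR = "lidar_fine_tuning"
    DONE = "aligned"


def next_stage(stage, bbox_error_px, lidar_error_m,
               bbox_enter_tol=10.0, bbox_exit_tol=25.0, lidar_tol=0.01):
    """Decide which stage runs on the next control cycle.

    bbox_error_px: horizontal offset of the box from its reference, in pixels.
    lidar_error_m: difference between current and reference side distance.
    Thresholds are illustrative assumptions, not measured values.
    """
    if stage is Stage.BBOX:
        # Hand over to LiDAR once the box is within the tight tolerance.
        return Stage.LIDAR if abs(bbox_error_px) <= bbox_enter_tol else Stage.BBOX
    if stage is Stage.LIDAR:
        # A significant box deviation sends the robot back to box matching.
        if abs(bbox_error_px) > bbox_exit_tol:
            return Stage.BBOX
        if abs(lidar_error_m) <= lidar_tol:
            return Stage.DONE
        return Stage.LIDAR
    return Stage.DONE
```

Using a looser threshold to fall back than to hand over is a common way to avoid rapid flipping between stages when the error hovers near a single limit.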

Nav2:

The Navigation 2 (Nav2) library in ROS 2 is a versatile framework that facilitates autonomous navigation for mobile robots. It provides essential tools for path planning, goal execution, and obstacle avoidance in both known and dynamic environments. In this use case, Nav2 handles high-level navigation, guiding the AMR to its target location by leveraging map data and sensor inputs such as LiDAR.

While Nav2 excels in managing diverse navigation scenarios, sensor limitations and algorithmic constraints can result in minor inaccuracies, leaving the AMR slightly offset from the desired target position. These deviations are addressed by activating the object-tracking pipeline for fine-tuning alignment and orientation.
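To make the residual offset concrete, the sketch below computes the position and heading error between a goal pose and the pose where the robot actually stopped. It is an illustration only: the function names and the tolerance values are hypothetical, and poses are assumed to be `(x, y, yaw)` tuples in metres and radians in the map frame.

```python
import math


def pose_error(goal, actual):
    """Return (distance_m, yaw_error_rad) between two (x, y, yaw) poses."""
    dx = goal[0] - actual[0]
    dy = goal[1] - actual[1]
    diff = goal[2] - actual[2]
    # Wrap the heading difference into [-pi, pi].
    yaw_error = math.atan2(math.sin(diff), math.cos(diff))
    return math.hypot(dx, dy), yaw_error


def within_tolerance(goal, actual, xy_tol, yaw_tol):
    """True if the stop pose would count as 'goal reached'."""
    distance, yaw_error = pose_error(goal, actual)
    return distance <= xy_tol and abs(yaw_error) <= yaw_tol


# Illustrative numbers: the robot is accepted as arrived while still
# about 10 cm and 0.1 rad away from the requested pose.
reached = within_tolerance((1.0, 2.0, 0.0), (0.9, 2.0, 0.1),
                           xy_tol=0.25, yaw_tol=0.25)
```

A goal that is reported as reached can therefore still be off by up to the configured tolerance, which is the gap the object-tracking pipeline is meant to close.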

YOLOv8:

YOLO (You Only Look Once) is a family of object detection models known for fast and accurate performance. In our use case, YOLOv8 enables the AMR to detect and track a mug in real time. By extracting spatial information from the RealSense camera images, YOLOv8 supplies the object tracking algorithm with the data it needs for alignment and orientation, enabling the robot to interact intelligently with its surroundings. This real-time detection capability lets the AMR dynamically adjust its position relative to the target object, extending its functionality beyond basic navigation.

A key strength of YOLOv8 lies in its adaptability and unified architecture, which simultaneously predicts bounding box coordinates and class probabilities. While the current use case focuses on detecting a mug, YOLOv8 can be trained for a wide range of objects, making it suitable for applications in diverse industries. Its reliability across varying lighting conditions and environments further adds to the AMR’s operational flexibility.
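Since the detector may report several objects per frame, the tracker first has to pick the one it cares about. The sketch below shows one way to do that on already-parsed detections. The dictionary layout, the `pick_target` name, and the confidence threshold are assumptions for illustration; the label string depends on how the model was trained (for example, COCO-pretrained weights label this kind of object `cup` rather than `mug`).

```python
def pick_target(detections, target_label="mug", min_confidence=0.5):
    """Return the most confident detection of target_label, or None.

    detections: list of dicts with keys 'label', 'confidence', and
    'box' as (x_min, y_min, x_max, y_max) in pixels. This layout is a
    hypothetical intermediate format, not the detector's native output.
    """
    candidates = [
        d for d in detections
        if d["label"] == target_label and d["confidence"] >= min_confidence
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d["confidence"])


frame_detections = [
    {"label": "mug", "confidence": 0.62, "box": (100, 120, 160, 200)},
    {"label": "chair", "confidence": 0.91, "box": (300, 80, 520, 400)},
    {"label": "mug", "confidence": 0.88, "box": (310, 190, 350, 270)},
]
target = pick_target(frame_detections)
```

Returning `None` when nothing qualifies lets the caller stop the robot or hold its last command instead of steering towards a stale or unrelated box.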

LiDAR:

LiDAR (Light Detection and Ranging) is a vital sensor technology in autonomous mobile robots (AMRs), providing precise distance measurements and spatial awareness that enhance navigation and object interaction capabilities. In the context of this blog’s use case, LiDAR complements the AMR’s RealSense camera and YOLOv8 object detection model by enabling fine-tuned positioning and orientation, particularly when navigating close to the target object.

The integration of LiDAR allows the AMR to calculate its relative position to the object with remarkable accuracy. For instance, once the mug is detected, the AMR uses LiDAR to measure the distance to flat surfaces near the object, helping it adjust its alignment. These adjustments are performed through iterative velocity commands (cmd_vel), allowing the AMR to move incrementally left, right, forward, or backward until the correct orientation and distance are achieved. This combination of LiDAR’s precision and YOLOv8’s object recognition ensures a seamless approach to the object.
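As a rough sketch of the LiDAR side of this step, the function below reads the distance to a surface at a given bearing from a planar laser scan and compares it with the stored reference. The argument names mirror the fields of a ROS `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`); the window size, range limits, and helper names are assumptions made for the example.

```python
import math
import statistics


def side_distance(ranges, angle_min, angle_increment, target_angle,
                  window=2, range_min=0.05, range_max=12.0):
    """Median of the valid ranges around target_angle (radians).

    Returns None if the angle is outside the scan or no beam in the
    window is valid (inf, NaN, or outside the sensor limits).
    """
    center = round((target_angle - angle_min) / angle_increment)
    if center < 0 or center >= len(ranges):
        return None
    lo = max(0, center - window)
    hi = min(len(ranges), center + window + 1)
    valid = [r for r in ranges[lo:hi]
             if math.isfinite(r) and range_min <= r <= range_max]
    if not valid:
        return None
    return statistics.median(valid)


def lateral_error(current_distance, reference_distance):
    """Positive when the robot is farther from the surface than intended."""
    return current_distance - reference_distance


# Synthetic 360-beam scan with a wall roughly 1 m to the robot's left.
scan = [2.0] * 360
scan[268:273] = [1.0, 1.02, float("inf"), 0.98, 1.01]
left = side_distance(scan, -math.pi, 2 * math.pi / 360, math.pi / 2)
```

Taking the median over a few neighbouring beams is a simple way to reduce the effect of a single dropped or noisy reading before the error is turned into a velocity command.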

Experiments:

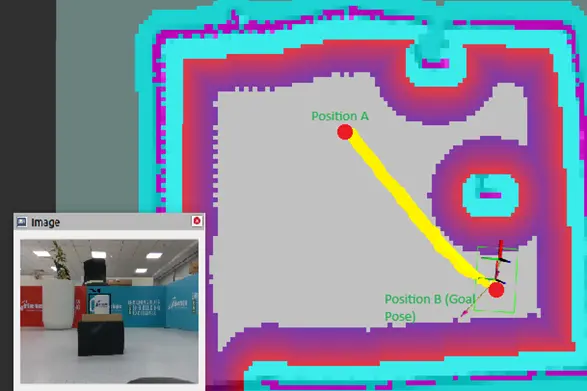

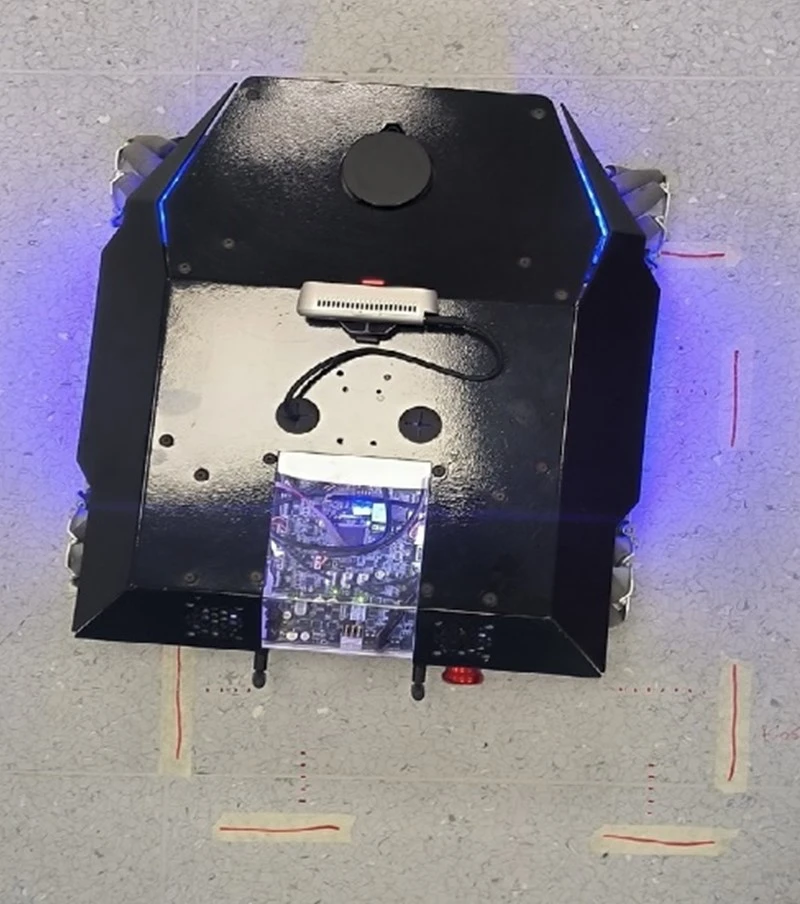

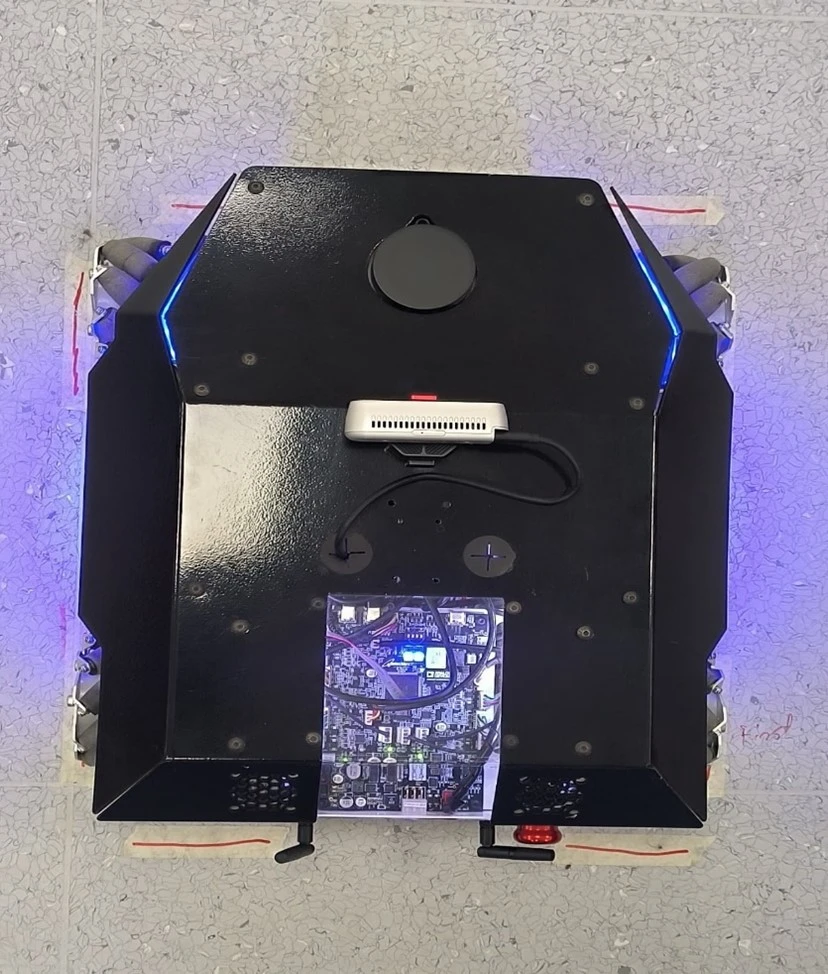

This section gives a glimpse of how the AMR’s orientation improves when the object tracking pipeline is used. The screenshots show the AMR’s home position marked as a square on the floor, RViz2 (the ROS 2 visualizer), and the camera feed detecting a mug along with its bounding box.

Figure (a) shows a goal being requested through Nav2, which guided the AMR from its starting point, A, to the intended destination, point B. When the AMR reached position B, it showed substantial positional drift, due to the intrinsic drift in its movement and the goal tolerance configured in Nav2. This deviation prevented the robot from aligning perfectly with its intended target. The offset in the AMR’s final position was clearly visible, emphasizing the need for AMR reorientation.

Figure (b) shows a top view of how much the AMR’s position has deviated from its intended location.

Figure (c) shows how the AMR re-aligned itself, adjusting its position and orientation using the object tracking pipeline.

Use Case:

- AMRs are often tasked with transporting and delivering goods. As the robot navigates, it might encounter changes in the environment, such as new obstacles or a modified layout. Reorientation allows the AMR to re-align itself, optimize its path, and continue its task efficiently.

- When a manipulator arm is mounted on top of the AMR and the task is to pick up a detected object, the AMR must move towards the object and position and orient itself at a specific location so that the arm can reach it. Reorientation provides this final alignment.

- When the battery runs low, AMRs navigate to a charging station on their own. The LiDAR and RGB cameras, paired with AI, ensure precise alignment with the docking ports. This maximizes uptime by automating battery charging without human intervention.

Conclusion:

The integration of AI and robotics technologies showcases the capabilities of AMRs in tracking objects and precisely positioning themselves at target locations. Through AI-driven reorientation, AMRs achieve accurate alignment, adapt to dynamic environments, and optimize task efficiency. These innovations highlight how AI-powered reorientation can transform industries by automating complex processes and improving operational efficiency.


References:

- Joseph Redmon, Santosh Divvala, Ross Girshick, Ali Farhadi, “You Only Look Once: Unified, Real-Time Object Detection”, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 779-788.

- https://docs.nav2.org/

- https://en.wikipedia.org/wiki/Lidar

- https://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html