Containers have transformed how software applications are developed, deployed, and managed. This overview explains what containers are, how software was deployed before containerization, and why containerization matters so much in software development today.

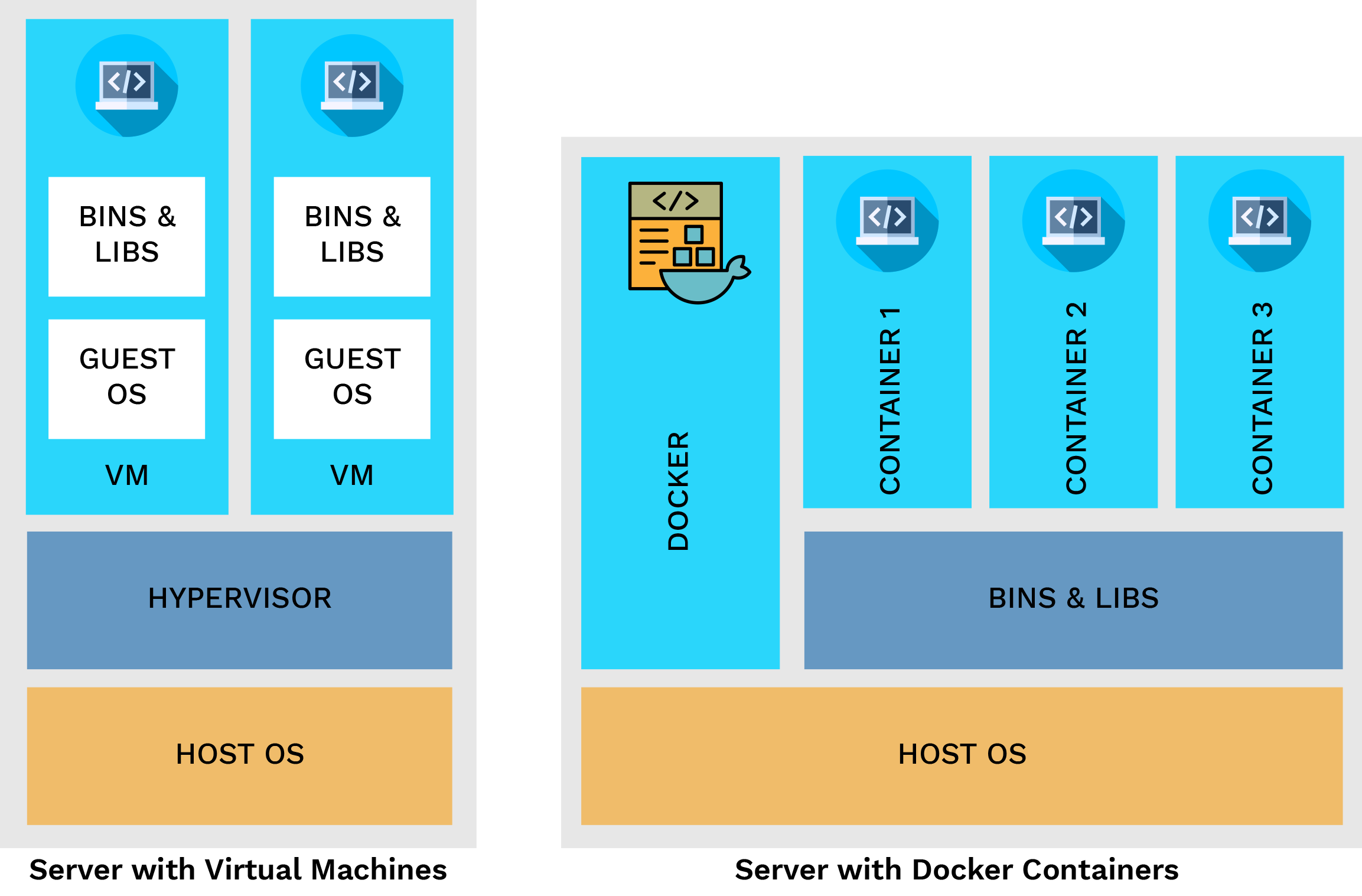

Containers provide an isolated environment, also known as a sandbox, in which an application and its dependencies run. Containers and virtual machines (VMs) have similar goals: both isolate an application and its dependencies in a self-contained unit that can run anywhere. Containers, however, are much smaller than VM images while still being able to run an entire web application or service.

Both VMs and containers also reduce the need for dedicated physical hardware, since many workloads can share a single machine, which makes the use of computing resources more economical and energy-efficient.

A World Before Containerization

In the early days of software development, applications were often monolithic, with all components tightly integrated into a single codebase. Deployment involved manually configuring servers and ensuring that each component worked seamlessly together. This approach, while simple in concept, became increasingly inconvenient as applications grew in complexity.

The advent of virtualization changed this. Virtual machines introduced a layer of abstraction between an application and the hardware it runs on, letting developers create isolated environments with better resource utilization and more straightforward deployment. However, every VM still runs a full guest operating system, which leads to higher resource consumption.

VMs are made possible by a hypervisor, also known as a virtual machine monitor (VMM): software, firmware, or hardware that creates and runs virtual machines. A hypervisor lets a single host computer run many guest VMs by virtually allocating its resources, such as memory and processing power.

Image Source: virtasant.com

While VMs offered improved isolation and flexibility, the resource overhead remained a concern. Running multiple VMs on a single physical server required substantial computational resources. This inefficiency led to increased infrastructure costs and limited the scalability benefits that virtualization promised.

Although the concept of containers dates back to the 1970s, containers did not gain widespread acceptance until Docker was introduced in 2013, after which their popularity skyrocketed.

The popularity of container technology significantly increased in 2017 as various companies, including Pivotal, Rancher, AWS, and Docker, shifted their focus to adopt the open-source Kubernetes container scheduler and orchestration tool. This solidified Kubernetes’ role as the go-to technology for container orchestration.

In April 2017, Microsoft enabled organizations to run Linux containers on Windows Server. This was a major development for organizations that wanted to containerize applications while staying compatible with their existing systems.

Importance and Benefits of Containerization

Containerization has a lot to offer developers and teams who write apps that must work on different platforms or in different deployment scenarios, such as the cloud or on-premises. Its advantages include:

- Portability. A container bundles a program with everything it needs to run and isolates it from the host operating system, so it can be deployed reliably on any host with a compatible container runtime and kernel (Linux containers need a Linux kernel, Windows containers a Windows one). Because container images are far smaller than VM images, containers are also much easier to move between environments than virtual machines.

- Agility. Developers can keep using their familiar tools and, thanks largely to Docker’s standard packaging format, keep their Agile methodologies and DevOps workflows, which speeds up application development and deployment.

- Speed. Containers do not include a complete operating system; they share the host machine’s OS kernel, so they incur lower resource overhead than virtual machines. This improves server efficiency and shortens startup times, because no additional operating system has to boot.

- Fault tolerance. Each container runs in isolation from the others, so a failure in one container does not affect the operation of the rest. Developers can detect and resolve the issue in the failed container while the unaffected containers keep running, which minimizes downtime.

- Efficiency. As noted above, containers share the host machine’s operating system kernel. As a result, they not only start faster than virtual machines but also need much less disk space and fewer resources. This lowers costs, because many containers can run on a single server or cloud instance, and it makes containers easier to scale than virtual machines.

- Security. Isolating containers from one another helps prevent malicious code in one container from affecting other containers or the host system, although containers share the host kernel, so this isolation is weaker than that of a VM. Developers can further strengthen security by defining precise permissions, restricting communication with unnecessary resources, and automatically blocking unwanted components from entering containers.

- Ease of management. Through automation, a container orchestration platform like Kubernetes makes the installation, scaling, and administration of containerized workloads, applications, and services more efficient. This simplifies the management, monitoring, updating, and troubleshooting of container-based applications significantly.

Docker

- Overview of Docker

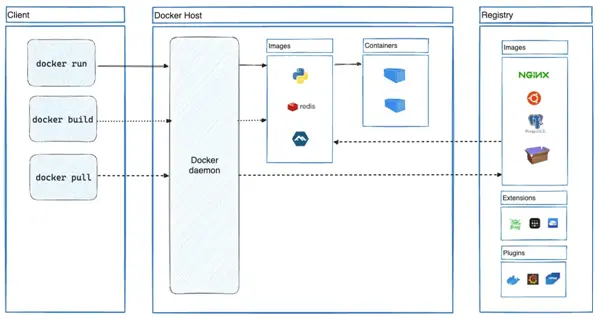

Docker is a software platform based on OS-level virtualization that lets developers easily create, deploy, and run applications in Docker containers, which bundle all of the applications’ dependencies.

It separates applications from the underlying infrastructure, enabling fast software delivery and letting teams manage infrastructure the same way they manage applications.

- Key components of Docker

Image Source: docker.com

- Docker Engine: The core of the Docker system, consisting of the Docker daemon, a REST API, and the command-line interface (CLI).

- Docker Images: Read-only templates used to build Docker containers.

- Docker Container: A runnable instance of an image; a unit of software that packages code and all its dependencies so the application runs quickly and reliably from one computing environment to another.

- Dockerfile: A text document containing all the commands a user could call on the command line to assemble an image. Docker reads these instructions to build the image automatically.

- Docker Registry: A central repository for Docker images, where developers store, distribute, and share containerized applications.

- Docker Volumes: A way to persist data generated and used by Docker containers.

- Docker Compose: A tool for defining and running multi-container Docker applications.
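To see how several of these components fit together, here is a minimal, hypothetical Dockerfile. It assumes an ASP.NET Core app has already been published to a local out/ folder, and the demoeiapp.dll name and /app/data path are illustrative only. FROM pulls a base image from a registry (here the Microsoft Container Registry), VOLUME declares a mount point whose data lives in a Docker volume rather than in the container’s writable layer, and ENTRYPOINT sets the command each container started from the image runs.

```dockerfile
# Base image, pulled from a registry (here the Microsoft Container Registry)
FROM mcr.microsoft.com/dotnet/aspnet:7.0

# Working directory for the instructions that follow
WORKDIR /app

# Copy already-published app output into the image (assumes a local ./out folder)
COPY out/ .

# Declare a mount point; Docker backs it with a volume at run time
VOLUME /app/data

# Command that every container started from this image runs
ENTRYPOINT ["dotnet", "demoeiapp.dll"]
```

Building it with docker build -t demoeiapp . produces an image, and running docker run -d -v demo-data:/app/data demoeiapp starts a container whose writes to /app/data go to the named volume demo-data (a hypothetical name), so that data survives when the container is removed.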

.NET Framework and .NET Core

- Overview of .NET Framework

The .NET Framework enables the creation and execution of Windows applications and web services.

The .NET Framework can be used to develop console apps, Windows GUI apps, Windows Presentation Foundation (WPF) apps, ASP.NET apps, Windows services, service-oriented apps using Windows Communication Foundation (WCF), and workflow-enabled apps using Windows Workflow Foundation (WF).

- Overview of .NET Core

.NET Core is a free, open-source, cross-platform implementation of .NET that runs on Windows, macOS, and Linux.

.NET Core supports a wide range of applications, including games, machine learning, cloud, mobile, desktop, and IoT apps. It was written from scratch to be modular, lightweight, fast, and cross-platform, and it offers CLI tools for both development and continuous integration as well as flexible deployment options.

- Difference between .NET Core and .NET Framework

Architecture and Cross-Platform Compatibility:

.NET Framework: .NET Framework was designed for Windows and is tied to the Windows operating system, so it does not run on other platforms.

.NET Core: Designed to be cross-platform from the start. .NET Core applications run on Linux, Windows, and macOS, which makes it more versatile.

Deployment and Packaging:

.NET Framework: Applications built on .NET Framework depend on the full framework runtime being installed on the target machine, which makes deployments heavier and less portable.

.NET Core: .NET Core introduced self-contained deployments, in which the application, its dependencies, and the .NET runtime itself are packaged together, so nothing has to be preinstalled on the target machine. Its modular design also keeps deployment packages small and efficient, which is crucial in containerized environments like Docker.
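As a minimal sketch of a self-contained deployment in Docker, assuming the demoeiapp project used later in this article, a Linux x64 target, and .NET 7 image tags: the app is published with the runtime included, so the final stage can start from the runtime-deps image, which contains only the native libraries .NET needs and no .NET runtime of its own.

```dockerfile
# Build stage: the SDK image compiles and publishes the app
FROM mcr.microsoft.com/dotnet/sdk:7.0 AS build
WORKDIR /src
COPY . .
# -r linux-x64 --self-contained bundles the .NET runtime with the app
RUN dotnet publish -c Release -r linux-x64 --self-contained true -o /out

# Final stage: runtime-deps has the OS dependencies but no .NET runtime
FROM mcr.microsoft.com/dotnet/runtime-deps:7.0
WORKDIR /app
COPY --from=build /out .
# A self-contained publish produces a native executable named after the project
ENTRYPOINT ["./demoeiapp"]
```

Note that a self-contained publish is itself larger than a framework-dependent one, because it carries the runtime; the payoff is that the final image does not rely on a preinstalled .NET runtime.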

Containerization and Docker Support:

.NET Framework: .NET Framework applications can run in Docker containers, but only in Windows-based containers. This leads to larger container images and can limit the benefits of containerization.

.NET Core: .NET Core was designed with microservices and containerization in mind. Its lightweight apps are well suited to microservices architectures and easy to containerize.

Future Development:

.NET Framework: Microsoft has shifted its focus to .NET 5 and beyond. While .NET Framework is still supported, new features and improvements are being introduced in the unified platform, which combines elements of .NET Framework and .NET Core.

.NET Core: Evolved into .NET 5 and its successors, which aim to combine the best features of .NET Framework and .NET Core.

Dockerizing .NET Applications

- Why Dockerize .NET Applications

Dockerizing .NET applications has several benefits: it lets developers create isolated test environments, ensures consistency between development and production environments, and enables cross-platform compatibility.

Docker also makes scaling easier: you can run multiple containers of the same application, for example each on a different machine, and load-balance traffic between them.

Docker and Kubernetes can reduce hosting costs through free and open-source tooling, lower management and configuration costs, lower infrastructure costs, and better collaboration between development and operations teams. Docker containers can be packed more densely on host hardware, start and stop faster, and use significantly less memory than virtual machines, all of which translates into lower IT expenses.

- Steps to Dockerize a .NET Application

- First, we need a .NET web app. Using the dotnet CLI, run:

dotnet new webapp -o demoeiapp

This will create a web app named demoeiapp.

- Create a Dockerfile in the project’s root folder (demoeiapp).

In it, we first define the base image to build from and choose a working directory inside the container where our files will go. Use SDK and runtime image tags that match the target framework in demoeiapp.csproj; the lines below assume .NET 7.

FROM mcr.microsoft.com/dotnet/sdk:7.0 AS buildenv

WORKDIR /app

Next, we copy the project file (ending in .csproj) and run dotnet restore so that all required dependencies are installed. Copying the project file on its own first lets Docker cache the restored packages until the project file changes.

- Restore packages.

COPY *.csproj ./

RUN dotnet restore

- Publish the app to generate a release build.

COPY . ./

RUN dotnet publish -c Release -o out

- Next, switch to the lighter ASP.NET runtime image to run the application, copying in only the published output from the build stage.

FROM mcr.microsoft.com/dotnet/aspnet:7.0

WORKDIR /app

COPY --from=buildenv /app/out .

ENTRYPOINT ["dotnet", "demoeiapp.dll"]

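- Next, build an image from these instructions by running the following command in the folder that contains the Dockerfile:

docker build -t demoeiapp .

- Once the image is built, run a container from it. The -d flag runs the container in the background, and -p 8080:80 maps port 8080 on the host to port 80 inside the container, where ASP.NET Core images before .NET 8 listen by default (from .NET 8 on, the default container port is 8080):

docker run -d -p 8080:80 --name myapp demoeiapp

The app is then available at http://localhost:8080.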

Choosing Between .NET and .NET Framework for Docker Containers

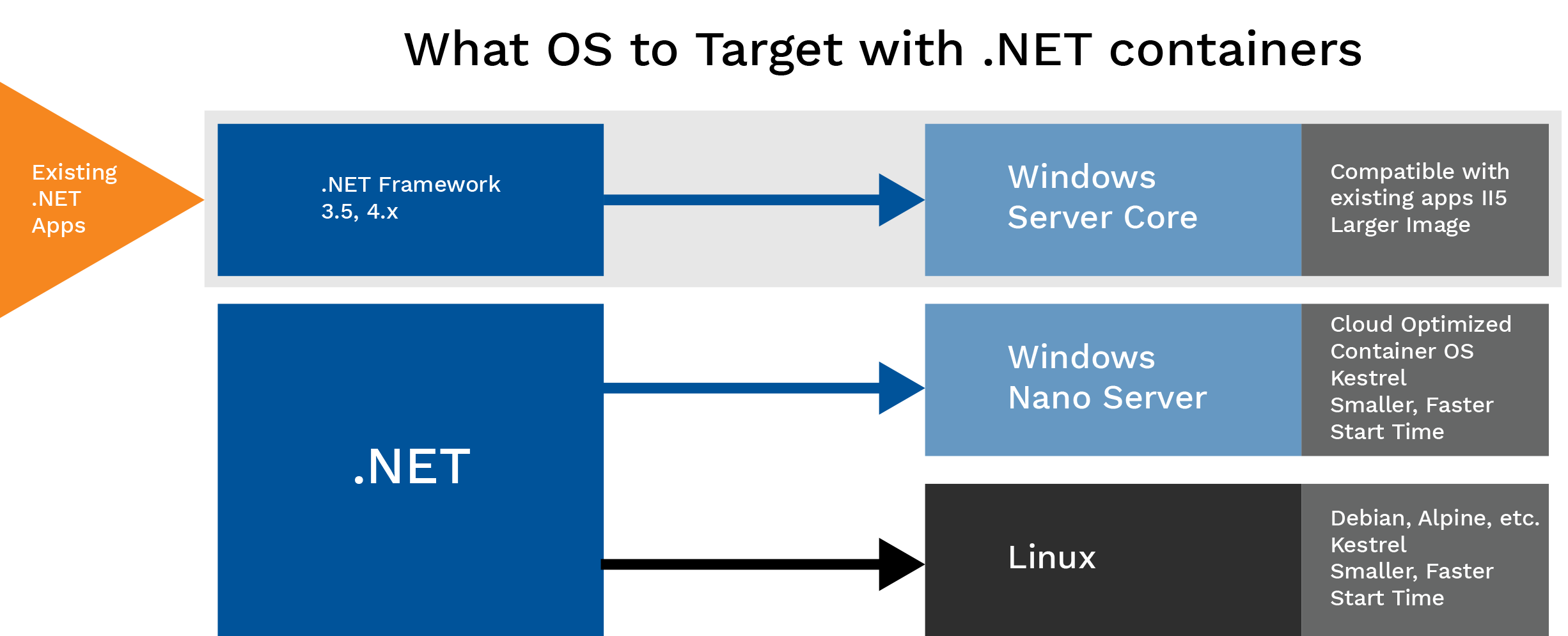

Because .NET Framework supports only Windows, .NET 7 is the best option if you want your application (web app or service) to run on the multiple platforms that Docker supports (Linux and Windows).

Container images are also significantly smaller with .NET 7 than with .NET Framework: .NET 7 can use the lighter Windows Nano Server or Linux base images, whereas a .NET Framework container must start from the heavier Windows Server Core image.

Image Source: learn.microsoft.com
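The base-image difference is visible in a Dockerfile’s FROM line. The fragment below contrasts three alternatives, with only the first one active. The tags are examples of Microsoft’s published images, and the ltsc2022 variants assume a Windows Server 2022 host, since Windows container base images generally have to match the host’s Windows version; check the Microsoft Artifact Registry for the tags that fit your environment.

```dockerfile
# .NET Framework 4.8: must start from a Windows Server Core base image
FROM mcr.microsoft.com/dotnet/framework/aspnet:4.8-windowsservercore-ltsc2022

# .NET 7 on Windows: can use the much smaller Nano Server base image instead
# FROM mcr.microsoft.com/dotnet/aspnet:7.0-nanoserver-ltsc2022

# .NET 7 on Linux: on a Linux Docker host this tag resolves to a Linux image
# FROM mcr.microsoft.com/dotnet/aspnet:7.0
```

Swapping the active FROM line is only part of a migration: the rest of the Dockerfile (build commands, paths, entry point) also differs between .NET Framework and .NET 7 apps.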

- When to choose .NET Framework for Docker containers

- Migrating existing applications directly to a Windows Server container

- Using third-party .NET libraries or NuGet packages not available for .NET 7

- Using .NET technologies not available for .NET 7

- Using a platform or API that doesn’t support .NET 7

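- When to choose .NET for Docker containers

- Deploying the same application on both Linux and Windows

- Creating and deploying microservices in containers

- Running many containers densely in scalable systems, where smaller images and faster startup matter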

Further Steps

- Building Microservices

Transitioning from a monolithic architecture to microservices breaks a complex application into smaller, independently deployable services. This shift fosters agility, enabling rapid iteration and feature delivery. Microservices also improve scalability, because individual components can scale with demand, which optimizes resource usage. They strengthen resilience as well, by isolating failures to specific services and preventing system-wide disruptions. Teams can also experiment with new technologies in one service without compromising the integrity of the whole system. Ultimately, embracing microservices supports continuous innovation and competitive advantage in today’s dynamic business environment.

In practice, building microservices means splitting a large system into smaller, easier-to-manage services that communicate with each other through APIs, which improves scalability, flexibility, and ease of maintenance.

- Container Orchestration

Container orchestration automates much of the operational effort required to run containerized workloads and services. Software teams use an orchestration platform, such as Kubernetes, to handle scheduling, deployment, scaling, load balancing, availability, and networking for their containers.

Conclusion

Containerization has emerged as a transformative force in modern software development, marking a paradigm shift from traditional deployment models. By encapsulating programs and their dependencies in lightweight, portable containers, developers can improve consistency, scalability, and efficiency across a variety of computing environments. Docker, a leading containerization platform, offers a complete ecosystem of tools and components that ease the container lifecycle, from development and testing to deployment and scaling.

Furthermore, the choice between .NET Framework and .NET Core for Docker containers underscores the importance of aligning technology with project requirements and objectives. While .NET Framework may be suitable for legacy applications with dependencies on Windows-specific features, .NET Core offers advantages in terms of performance, cross-platform compatibility, and support for modern cloud-native architectures. By Dockerizing .NET applications and embracing container orchestration practices, organizations can unlock new levels of agility, resilience, and scalability, empowering them to deliver innovative solutions and stay ahead in today’s dynamic digital landscape.