Introduction

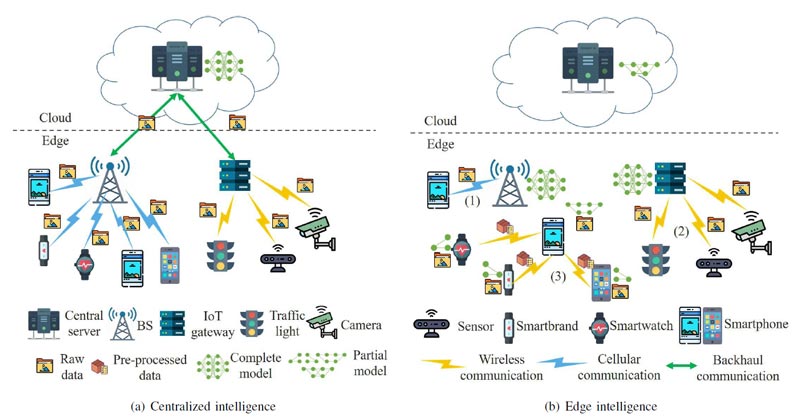

Everything is getting smarter these days, from watches to cars, agriculture to industries, and stores to cities. Increased connectivity has led to the rise of smart devices, also called “connected things”. During the 1960s, the focus was on improving the computing power of a single device. Then, around the 1980s, connecting multiple dumb terminals to a mainframe led to the beginning of distributed computing. For the last decade, the trend has been primarily towards centralized processing of data with the help of cloud computing. Though cloud computing has been driving ubiquitous computing, the centralized workflow of capturing, storing, and processing data in the cloud is not enough for new and emerging requirements and workloads.

“IoT” has been a buzzword for years, but over the past decade we have seen its adoption by businesses increase. According to Gartner, the number of connected IoT endpoints reached nearly 5.8 billion in 2020. This tremendous rise of the Internet of Things (IoT) and its increasing adoption are pushing computing back to the ‘edge’ of local networks and intelligent devices.

In traditional computing, and even in cloud computing, the actual processing of data occurs far away from its source. Emerging applications, services, and workloads increasingly demand a different type of architecture, one that scales easily across distributed infrastructure. Cloud capabilities need to be available at remote sites to support both today’s requirements (retail data analytics, network services) and tomorrow’s innovations (smart cars, smart cities, AR/VR). Cloud computing therefore has to extend across multiple sites and platforms to cope with evolving demands.

What is edge intelligence?

There is a very thin line between edge analytics and edge intelligence. Edge analytics can be defined as the process of collecting data and analyzing it, all close to the edge device. The processed data is then sent to the cloud for further analytics.

Edge intelligence goes a step beyond edge analytics: using artificial intelligence, the edge device not only analyzes the data but also acts on the results itself. This differs from cloud analytics and cloud intelligence, where all the data is sent over the network to a centralized data store for analysis and decision making.

The picture above illustrates the workflows of edge analytics and edge intelligence. An intelligent edge device has an optional flow for storing metadata. This metadata is a concise report on the processing the device performed, and it is sent to the cloud to evaluate the performance of different edge devices. It is also vital for analyzing the actions of edge devices and helps with further decisions, such as re-training edge models for better accuracy or re-training them with additional data.
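The difference between the two workflows can be sketched in Python. This is a minimal illustration, not the architecture in the picture: the class names, the `ALERT_THRESHOLD` value, and the threshold rule standing in for an on-device AI model are all hypothetical. The analytics node only summarizes and forwards its results, while the intelligence node decides and acts locally and keeps optional metadata for the cloud.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical threshold: readings above this count as an "event".
ALERT_THRESHOLD = 80.0


@dataclass
class EdgeAnalyticsNode:
    """Analyzes locally, but leaves decisions to the cloud."""
    outbox: list = field(default_factory=list)  # messages bound for the cloud

    def process(self, readings):
        summary = {"mean": mean(readings), "max": max(readings)}
        self.outbox.append({"type": "summary", **summary})  # the cloud decides
        return None  # no local action


@dataclass
class EdgeIntelligenceNode:
    """Analyzes and acts locally; the cloud only receives optional metadata."""
    actions: list = field(default_factory=list)   # actions taken on the device
    metadata: list = field(default_factory=list)  # optional report for the cloud

    def decide(self, readings):
        # Stand-in for an on-device AI model (assumption: a simple threshold rule).
        return "raise_alert" if max(readings) > ALERT_THRESHOLD else "no_action"

    def process(self, readings):
        action = self.decide(readings)
        if action != "no_action":
            self.actions.append(action)  # act at the edge itself
        # Metadata lets the cloud evaluate this device and plan re-training.
        self.metadata.append({"action": action, "n_readings": len(readings)})
        return action


if __name__ == "__main__":
    readings = [42.0, 55.5, 91.2]
    analytics, intelligence = EdgeAnalyticsNode(), EdgeIntelligenceNode()
    analytics.process(readings)            # decision deferred to the cloud
    print(analytics.outbox)
    print(intelligence.process(readings))  # decision made on the device
    print(intelligence.metadata)
```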

Why did Edge Analytics have to evolve into Edge Intelligence?

Edge analytics truly enabled connected devices to perform complex event processing on their own, leading to real-time analytics, but businesses wanted more out of it.

An extract from a paper by HPE Edgeline and NVIDIA:

“Thirty billion images a second; one hundred trillion images an hour!

That is how much content will be captured in 2020 by surveillance cameras across the globe. These one billion cameras, twice today’s number, will be at traffic intersections, transit stations, and other public areas, helping to make our cities safer and smarter. An essential part of any Smart City or AI City initiative, they’ll also be in retail stores, service centers, warehouses, and more, ensuring safety and security, gathering information to boost sales, track inventory, and improve service.”

This is only a glimpse of what is coming, and it is coming fast. These figures cover only cameras; the total becomes staggering once we add the data from mobile phones and other sensors around the globe. This growth is what has pushed edge analytics to evolve into edge intelligence.

Edge analytics needs to evolve because:

- The amount of data generated by edge devices will keep increasing year over year (YoY), so edge devices must be able to do more than just complex event processing or analytics.

- Because the volume of data is huge, edge devices must have the intelligence to work autonomously even with a bare-minimum connection to the cloud.

- Edge devices now come with very fast microprocessors, ample storage, and fast, general-purpose I/O interfaces.

- Edge devices can easily discover, connect, and communicate with other edge devices in the vicinity.

The evolution of GPUs has given edge devices tremendous computing power, making the shift from edge analytics to edge intelligence even smoother. With the transition from connected devices to intelligent devices, there is a push to bring AI processing from the cloud to the devices, turning them into an intelligent edge.

Benefits of Edge Intelligence

- Intelligence at low latency

  - Cloud computing and other centralized systems have long suffered from latency. Capturing data, sending it to a central location, processing it, and sending a response back all take time, which does not help near-real-time decision making.

  - The core advantage of edge intelligence is reduced latency, which enables near-real-time actions and improves the performance of the entire system. For example, an edge camera can identify a vacant parking spot from its own video feed and update the vacancy table without streaming every feed to the cloud or a central server for video processing and then waiting for the result. Identifying the vehicle, the parking spot, and where the vehicle is located all happens on the edge device itself.

  - This also frees cloud and centralized systems from processing raw, irrelevant data, so they can work on highly structured, context-rich, actionable data. Latency therefore improves not just at the edge but across the overall system.

- Data storage at low bandwidth

  - In any IoT deployment, transmitting all the data collected by thousands of edge devices requires very high bandwidth, and the requirement keeps growing as the number of devices increases. Remote sites may not even have enough bandwidth to exchange data and analysis results with a cloud server. Edge intelligence lets devices perform the analysis and take the required actions locally, storing data, metadata, and action reports that can be collected later.

- Linear Scalability

  - Edge intelligence architectures scale linearly as IoT deployments grow because they use the compute power of the deployed devices themselves, which can do the heavy lifting of executing deep learning and machine learning models. This reduces pressure on centralized cloud systems, as edge devices share the major burden of performing intelligent functions.

- Reduced Operational Costs

  - Because intelligent edges act on time-sensitive data locally and provide central systems only with content-rich data, you save a considerable amount of cloud storage. This reduces your operational costs.

  - Edge intelligence drives real-time actions for all connected IoT devices, which lets operational technology (OT) professionals deploy and maintain devices more efficiently.
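The parking-spot example and the bandwidth argument above can be made concrete with a small simulation. This is a sketch under stated assumptions: `detect_occupied_spots` is a hypothetical stand-in for an on-device vision model (each simulated "frame" is already the set of occupied spot IDs), and the 200 KB frame size is an illustrative figure, not a measurement. Only vacancy changes leave the device, so uploads grow with events rather than with frames.

```python
import json

# Assumption for illustration: each raw frame is about 200 KB when compressed.
RAW_FRAME_BYTES = 200_000


def detect_occupied_spots(frame):
    """Stand-in for an on-device vision model (hypothetical).

    Here a 'frame' is already a set of occupied spot IDs so the sketch
    stays self-contained; a real device would run a detector on pixels.
    """
    return set(frame)


def run_edge_camera(frames, all_spots):
    vacancy = {spot: True for spot in all_spots}  # vacancy table kept at the edge
    uploaded_bytes = 0
    events = []
    for t, frame in enumerate(frames):
        occupied = detect_occupied_spots(frame)
        for spot in all_spots:
            is_vacant = spot not in occupied
            if vacancy[spot] != is_vacant:  # only state changes leave the device
                vacancy[spot] = is_vacant
                event = {"t": t, "spot": spot, "vacant": is_vacant}
                events.append(event)
                uploaded_bytes += len(json.dumps(event).encode())
    streamed_bytes = RAW_FRAME_BYTES * len(frames)  # cloud-only alternative
    return vacancy, events, uploaded_bytes, streamed_bytes


if __name__ == "__main__":
    frames = [{"A1"}, {"A1"}, {"A1", "B2"}, {"B2"}]
    vacancy, events, sent, streamed = run_edge_camera(frames, ["A1", "A2", "B2"])
    print(vacancy)  # current vacancy table
    print(events)   # the only messages uploaded
    print(f"uploaded {sent} bytes vs. {streamed} bytes of raw video")
```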

Components in Edge Intelligence

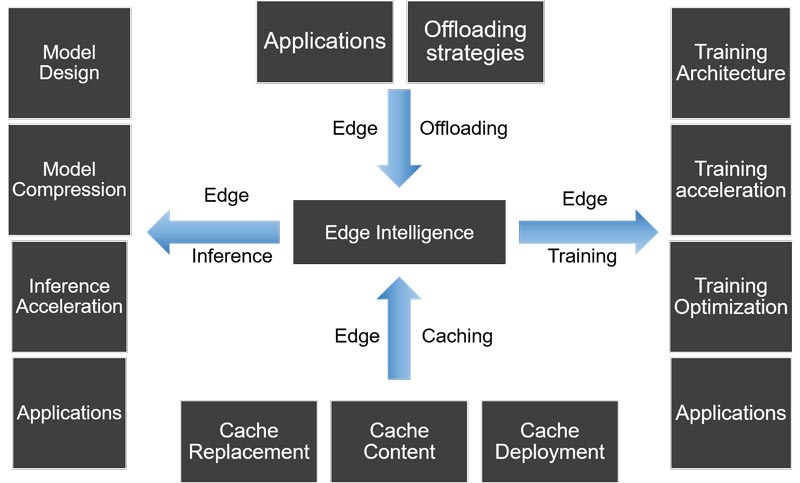

Recent research on edge intelligence has identified four main components of an edge intelligence architecture: edge caching, edge training, edge inference, and edge offloading. Let’s go through each of them in detail.

Edge Caching

In edge intelligence, edge caching primarily handles the distributed data arriving at edge devices from end users and the surrounding environment, as well as the data generated by the edge devices themselves. For example, the data a cellphone generates is stored on the phone itself. Similarly, a phone’s sensors (accelerometer, gyroscope, and magnetometer) gather environmental data, which is processed, stored at suitable locations, and used by artificial intelligence algorithms to provide services to users.

This module takes care of complete edge data store management. While implementing it, you need to answer three questions:

What data to cache? Where to cache it? How to cache it?

Let’s see how effective caching strategies work for edge intelligence. For the first question, consider continuous vision systems such as CCTV and surveillance cameras, whose consecutive incoming frames contain a large amount of similar pixel data. Devices send video and frames to the cloud when certain actions are taken. For AI-based applications such as people or object recognition deployed at the edge, a vision edge device can capture only the result frames and send them to the cloud server for further processing, instead of sending complete videos. For tracking scenarios, you can cache reference frames for video processing; with effective caching, the device saves only the pixel data that differs instead of every resulting frame. For the repeated parts, edge devices can reuse earlier results to avoid unnecessary computation. Effectively identifying patterns of redundancy in incoming data helps speed up computation and accelerate inference.
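The caching idea for continuous vision can be sketched as follows: keep a reference frame and its inference result, compare each new frame against it, and reuse the cached result when only a small fraction of pixels changed, retaining just the changed pixels. This is a simplified illustration in plain Python; frames are flat lists of grayscale values, and `PIXEL_DELTA` and `REUSE_FRACTION` are hypothetical thresholds, not values from the research.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical thresholds; real values depend on the camera and the model.
PIXEL_DELTA = 10        # a pixel counts as changed if it moves by more than this
REUSE_FRACTION = 0.05   # reuse the cached result if under 5% of pixels changed


class FrameCache:
    """Caches a reference frame and its inference result (sketch)."""

    def __init__(self, model: Callable[[List[int]], str]):
        self.model = model
        self.reference: Optional[List[int]] = None
        self.result: Optional[str] = None
        self.model_calls = 0

    def changed_pixels(self, frame: List[int]) -> Dict[int, int]:
        """Pixels (index -> new value) that differ noticeably from the reference."""
        return {i: new for i, (new, old) in enumerate(zip(frame, self.reference))
                if abs(new - old) > PIXEL_DELTA}

    def infer(self, frame: List[int]) -> Tuple[str, Optional[Dict[int, int]]]:
        """Return (result, delta); delta is None when the model actually ran."""
        if self.reference is not None:
            delta = self.changed_pixels(frame)
            if len(delta) / len(frame) < REUSE_FRACTION:
                return self.result, delta  # redundant frame: reuse, keep only the delta
        self.model_calls += 1
        self.result = self.model(frame)
        self.reference = list(frame)  # this frame becomes the new reference
        return self.result, None


if __name__ == "__main__":
    # Hypothetical "model": labels a frame by its mean brightness.
    cache = FrameCache(lambda f: "bright" if sum(f) / len(f) > 100 else "dark")
    print(cache.infer([50] * 100))              # model runs
    print(cache.infer([50] * 98 + [200, 200]))  # 2% changed: cached result reused
    print(cache.infer([200] * 100))             # large change: model runs again
    print("model calls:", cache.model_calls)
```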

For the second question, where to cache, existing research mainly focuses on three locations for cache deployment: edge devices, micro base stations (micro BS, such as local routers and hubs), and macro base stations (macro BS, such as large on-premises network routers covering a wide area). The right deployment depends on the problem one wants to solve using edge intelligence.

For example, assume an application recognizes whether a person entering the premises has their face covered. An AI model that identifies covered faces is best deployed on the edge camera itself. This brings actionable alerts closest to the point of data capture, with faster processing and results. If the application needs to scale to the entire premises, then in addition to deploying the model on every camera, you will also need to cache information at the routers and at the hub that serves all edge devices, which gives you more content-rich information for face detection across the premises.
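One way to picture the three cache locations is a tiered lookup that searches the nearest tier first and copies hits closer to the device. This is a sketch under assumptions: the tier names follow the text, but the per-tier latencies in `TIERS`, the sequential search, and the promote-on-hit rule are illustrative choices, and the cloud appears only as a final fallback. The key in the usage example is hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple

# Nearest to farthest; latencies (ms per lookup) are hypothetical.
TIERS: List[Tuple[str, int]] = [
    ("edge_device", 1),
    ("micro_bs", 5),    # local routers, hubs
    ("macro_bs", 20),   # large on-premises network routers
    ("cloud", 100),     # fallback beyond the edge
]


class TieredCache:
    def __init__(self) -> None:
        self.stores: Dict[str, Dict[str, Any]] = {name: {} for name, _ in TIERS}

    def put(self, tier: str, key: str, value: Any) -> None:
        self.stores[tier][key] = value

    def get(self, key: str) -> Tuple[Optional[Any], Optional[str], int]:
        """Search tiers in order; return (value, tier found, total latency in ms)."""
        elapsed = 0
        for depth, (name, latency_ms) in enumerate(TIERS):
            elapsed += latency_ms
            if key in self.stores[name]:
                value = self.stores[name][key]
                for nearer, _ in TIERS[:depth]:
                    self.stores[nearer][key] = value  # promote toward the device
                return value, name, elapsed
        return None, None, elapsed


if __name__ == "__main__":
    cache = TieredCache()
    cache.put("macro_bs", "zone_3_known_faces", ["badge_101", "badge_204"])
    print(cache.get("zone_3_known_faces"))  # first lookup travels to the macro BS
    print(cache.get("zone_3_known_faces"))  # now served from the camera itself
```

Promotion ignores capacity here; on real devices it has to be paired with a replacement policy, because edge storage is limited.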

Lastly, for the question “how to cache?”, remember that the storage capacity of edge devices is limited, so effective cache replacement strategies must be implemented. Select a replacement policy that improves service quality. Most researchers adopt collaborative caching among edge devices, especially in networks with dense users. They usually formulate caching as an optimization problem over content replacement strategies, data association policies, and data incentive mechanisms.
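As one concrete replacement policy, here is least-recently-used (LRU) eviction for a fixed-capacity edge cache. LRU is a common, simple baseline chosen for illustration; it is not one of the collaborative or optimization-based schemes the research describes, and the capacity is whatever the device can afford.

```python
from collections import OrderedDict


class LRUCache:
    """Least-recently-used replacement for a fixed-capacity edge cache."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.items = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key not in self.items:
            self.misses += 1
            return None
        self.items.move_to_end(key)  # mark as most recently used
        self.hits += 1
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used entry


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("frame_1", b"...")
    cache.put("frame_2", b"...")
    cache.get("frame_1")          # frame_1 becomes most recently used
    cache.put("frame_3", b"...")  # evicts frame_2
    print(list(cache.items))      # ['frame_1', 'frame_3']
```

The hit and miss counters give a simple service-quality signal for comparing policies on a device's real access pattern.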

Edge Training

To date, the training of AI models to be deployed on an intelligent edge has mostly been centralized. We train a deep learning model on powerful central servers with powerful GPUs and port it to edge devices using their compatible edge SDK and runtime environment. This is still the best way to control the re-training and deployment of models to edge devices using cloud connectivity or other I/O interfaces. But a true edge intelligence architecture requires edge training.

Edge training refers to learning the optimal weights and biases of a deployed model, or identifying hidden patterns, from training data captured at the edge. For example, Google developed Gboard, an intelligent input (keyboard) application that learns the user’s input habits from their input history and predicts the user’s next input more precisely. Traditional centralized training relies on powerful servers and computing clusters, and edge devices and edge clusters are not nearly as powerful. Hence, in addition to the problem of the training set (caching), the key problems for edge training are:

(i) how to train (the training architecture), (ii) how to make the training faster (acceleration), (iii) how to optimize the training procedure (optimization), and (iv) how to estimate the uncertainty of the model output (uncertainty estimates).

For the first problem there are two approaches: solo training and collaborative training. In solo training, a model is trained on a single edge device, whereas in collaborative training multiple edge devices synchronize to train a common model. Solo training has higher hardware requirements, so heavier edge devices with more compute power and a larger power budget are ideal for it.

Solo training is not always realistic for low-power, low-bandwidth devices. Collaborative training addresses this problem, but because it requires the cooperation of multiple devices, it needs a good strategy for periodic communication and updates. Update frequency and communication cost are two factors that affect both communication and training performance, and much research aims to maintain high edge training performance while keeping the update frequency low. Because collaborative training runs over a network, it is also susceptible to malicious attacks. Finally, evaluating the confidence or performance of an edge-trained model is difficult in collaborative training, so uncertainty creeps into model performance; research in this area of edge training is ongoing.
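Collaborative training is often implemented with federated averaging (FedAvg), one approach among several, shown here only as an illustration. In this sketch each simulated device fits a one-variable linear model y = w·x + b by stochastic gradient descent on its own data, and a coordinator averages the parameters, weighted by sample count. Only parameters cross the network. The `local_epochs` parameter reflects the trade-off described above: more local work per round lets devices communicate less often. The learning rate, round counts, and synthetic data are assumptions.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held by one device


def local_train(w: float, b: float, data: Dataset,
                lr: float = 0.01, epochs: int = 5) -> Tuple[float, float]:
    """Plain SGD on squared error, run on one edge device's own data."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def federated_averaging(devices: List[Dataset], rounds: int = 20,
                        local_epochs: int = 5) -> Tuple[float, float]:
    """FedAvg-style loop: devices train locally, a coordinator averages.

    Raw data never leaves a device; only (w, b) is exchanged each round.
    """
    w, b = 0.0, 0.0
    total = sum(len(d) for d in devices)
    for _ in range(rounds):
        updates = [local_train(w, b, d, epochs=local_epochs) for d in devices]
        # Weight each device's parameters by how many samples it trained on.
        w = sum(uw * len(d) for (uw, _), d in zip(updates, devices)) / total
        b = sum(ub * len(d) for (_, ub), d in zip(updates, devices)) / total
    return w, b


if __name__ == "__main__":
    rng = random.Random(0)
    # Three hypothetical devices, each holding private samples of y = 2x + 1.
    devices = []
    for _ in range(3):
        xs = [rng.uniform(-1, 1) for _ in range(30)]
        devices.append([(x, 2 * x + 1) for x in xs])
    print(federated_averaging(devices))  # should be close to w = 2, b = 1
```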

Edge Inference

Edge inference is the process of computing the outputs of a trained model or algorithm on the edge device itself, for example to evaluate its performance on a test dataset. Suppose developers build a deep-learning-based face verification application. The model is built and trained on powerful CPUs and GPUs that give good performance, such as processing a face image within a second. But once it is deployed on edge devices, will you achieve the same performance?

Hence, the critical problems of employing edge inference are:

(i) how to make models suitable for deployment on edge devices or servers (by designing new models or compressing existing ones), and (ii) how to accelerate edge inference to provide real-time responses. Algorithm porting also plays a role in edge inference: existing algorithms must be made compatible with the platform or technology stack of the edge device on which they will be deployed.
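To answer the question raised by the face verification example, you can measure per-input latency with the same script on the training server and on the edge device. This is a minimal, framework-agnostic sketch: `model` is any callable, and `fake_verifier` in the usage example is a hypothetical stand-in that only burns CPU. Reporting a high percentile alongside the mean matters for real-time responses, because occasional slow inputs are what users notice.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def benchmark(model: Callable[[Any], Any], inputs: Sequence[Any],
              warmup: int = 3) -> Dict[str, float]:
    """Measure per-input inference latency (ms) on the current device."""
    if not inputs:
        raise ValueError("need at least one input")
    for x in inputs[:warmup]:
        model(x)  # warm-up runs absorb one-time costs such as lazy loading
    timings_ms = []
    for x in inputs:
        start = time.perf_counter()
        model(x)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    timings_ms.sort()
    p95_index = min(len(timings_ms) - 1, int(0.95 * len(timings_ms)))
    return {
        "mean_ms": statistics.mean(timings_ms),
        "p50_ms": statistics.median(timings_ms),
        "p95_ms": timings_ms[p95_index],  # nearest-rank style approximation
    }


if __name__ == "__main__":
    def fake_verifier(image):  # hypothetical stand-in for a face verification model
        return sum(v * v for v in image) > 0

    images = [[i % 7] * 10_000 for i in range(20)]
    print(benchmark(fake_verifier, images))
```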

There has been significant progress in the edge inference model stack, thanks to the competitive markets driving edge GPU technologies, and the problem of designing models that work well in edge environments is largely solved. Lightweight AI architectures such as SqueezeNet, MobileNet, Xception, ShuffleNet, and CondenseNet have emerged and are still evolving. These architectures naturally suit edge environments because they are designed as compact counterparts of larger GPU-based models, cutting unnecessary operations during inference. Their accuracy still varies compared with that of their larger counterparts running on GPUs, so research continues on compressing existing models into thinner, smaller models that are more computation- and energy-efficient with negligible or no loss of accuracy. Five approaches are commonly used for model compression: low-rank approximation, knowledge distillation, compact layer design, network pruning, and parameter quantization; these are beyond the scope of this paper.
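Of the five compression approaches listed, parameter quantization is the easiest to show in a few lines. The sketch below is an illustrative symmetric, per-tensor mapping of float weights to 8-bit integers plus a single scale factor; production toolchains typically also handle activations, calibration data, and per-channel scales. Storing int8 instead of 32-bit floats cuts weight storage by roughly four times, at the cost of a rounding error of at most half the scale per weight.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ≈ q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for computation or inspection."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0, 0.333]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale)
    print(f"max abs error {max_err:.5f} (bound: scale / 2 = {scale / 2:.5f})")
```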

As with edge training, edge inference is slower than inference in a centralized architecture. It can be accelerated with both hardware and software. Hardware acceleration mostly focuses on providing more parallel computation on devices such as CPUs, GPUs, and DSPs. Software acceleration focuses on optimizing resource management, pipeline design, and compilers, building on compressed models.

Edge Offloading

Edge offloading is another important feature of edge intelligence: an edge device can offload some of its tasks, such as edge training, edge caching, or edge inference, to other edge devices in the network. It is similar to the distributed computing paradigm, with edge devices forming an intelligent ecosystem. Edge offloading is an abstract service layer above the other three components and is of the utmost importance, because it gives independent edge devices a fail-safe strategy for overcoming resource shortages. A structured and effective implementation lets edge intelligence make full use of the resources available in the edge environment.

Research focuses on four strategies for edge offloading.

- Device to Cloud offloading: This strategy performs pre-processing and lightweight tasks on edge devices and offloads the rest to a cloud server, which can significantly reduce the amount of uploaded data and the latency. It is the conventional approach used in edge analytics and for edge devices with very limited compute and storage.

- Device to Server offloading: This strategy reduces direct connectivity to cloud resources and offloads major tasks to an on-premises server, which then takes care of synchronization with the cloud. It is a good approach when edge devices need to form an ecosystem across a large area of up to 1-2 km.

- Device to Device offloading: This is the ideal edge offloading strategy. For example, consider smart home scenarios, where IoT devices, smartwatches, and smartphones collaboratively

- Hybrid offloading: This strategy can be thought of as a mix of all the offloading approaches discussed above. It can be the most adaptive technique, making the best use of all available resources, and it can also be a secure and fail-safe option.
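A hybrid policy needs some way to decide, per task, where work should run. The sketch below estimates completion time on each candidate (the device itself, a neighboring device, an on-premises server, or the cloud) as upload time plus round-trip time plus compute time, and picks the fastest. All target figures in `TARGETS` are hypothetical, and the model deliberately ignores energy, returning results, queueing, and privacy, which a real offloading scheme would weigh.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Target:
    name: str
    gflops: float       # sustained compute speed of the target
    uplink_mbps: float  # bandwidth from the device to the target (0 = run locally)
    rtt_ms: float       # network round-trip time to the target


def completion_ms(task_gflop: float, input_mb: float, target: Target) -> float:
    """Rough estimate: upload time + round trip + compute time."""
    compute_ms = task_gflop / target.gflops * 1000.0
    if target.uplink_mbps == 0:  # no transfer when the device runs the task itself
        return compute_ms
    upload_ms = input_mb * 8 / target.uplink_mbps * 1000.0
    return upload_ms + target.rtt_ms + compute_ms


def choose_target(task_gflop: float, input_mb: float, targets: List[Target]) -> Target:
    """Hybrid policy: run each task wherever it is estimated to finish first."""
    return min(targets, key=lambda t: completion_ms(task_gflop, input_mb, t))


# Hypothetical environment: numbers are illustrative only.
TARGETS = [
    Target("this_device", gflops=10, uplink_mbps=0, rtt_ms=0),
    Target("neighbor_device", gflops=20, uplink_mbps=100, rtt_ms=2),  # device to device
    Target("onprem_server", gflops=500, uplink_mbps=50, rtt_ms=5),    # device to server
    Target("cloud", gflops=5000, uplink_mbps=10, rtt_ms=60),          # device to cloud
]

if __name__ == "__main__":
    for gflop, mb in [(0.05, 5.0), (0.5, 0.1), (2000, 0.5)]:
        print(gflop, mb, "->", choose_target(gflop, mb, TARGETS).name)
```

With these figures, a data-heavy but light task stays on the device, a moderate task goes to the on-premises server, and a very heavy task goes to the cloud. If the server is unreachable, `choose_target(2.0, 0.2, TARGETS[:2])` falls back to the neighboring device, which is the fail-safe role the text assigns to offloading.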

Challenges for Edge Intelligence

The grand challenges facing AI at the edge are mostly around data availability, model selection, and coordination mechanisms.

Data Availability

It is hard for edge devices to process raw data directly for edge training, because the data must first be made usable. Captured raw data cannot be used directly for model training and inference, and data coming from edge devices can have an obvious bias that affects learning performance. Federated learning can solve this problem to some extent, but synchronizing training procedures and communication across devices is still a challenge.

Model Selection

At present, the selection of AI models faces severe challenges at every level, from the models themselves to the training frameworks and hardware. Simply picking any lightweight AI model will not work; selecting a suitable threshold of learning accuracy and scale of AI model for quick deployment and delivery is also important. Secondly, with limited resources at the edge, selecting the proper training frameworks and accelerator architectures is crucial. Because model selection is coupled with resource allocation and management, the problem is complicated and challenging.

Co-ordination mechanisms

Working with multiple heterogeneous edge devices, we face differences in computing power and communication protocols, which pose challenges for model adaptability and serviceability. The same method may achieve different learning results on different clusters of devices. Establishing robust, flexible, and secure synchronization between edge devices, servers, and the cloud at both the hardware and software levels is of the utmost importance. There are immense research opportunities in a uniform API/IO interface for edge learning on ubiquitous edge devices.

Conclusion

Edge intelligence is still in its early stages, but the immense possibilities it opens up have researchers and companies excited to study and use it. This paper uncovers research opportunities in edge intelligence, compares edge analytics and edge intelligence, and identifies the critical components of edge intelligence. We have also discussed the benefits of edge intelligence and the challenges we still face in realizing it in its true essence. We hope this paper sheds light on the emerging field of edge intelligence and paves the way for fruitful discussion and research in this domain.

eInfochips’ Artificial Intelligence & Machine Learning offerings help organizations build highly-customized solutions running on advanced machine learning algorithms. We also help companies integrate these algorithms with image & video analytics, as well as with emerging technologies such as augmented reality & virtual reality to deliver utmost customer satisfaction and gain a competitive edge over others. For more information, please contact us today.