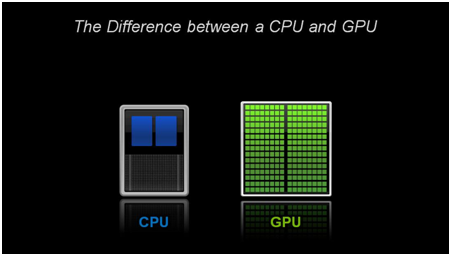

The GPU has become a preferred choice for computation-intensive tasks, largely because of its efficiency and cost-effectiveness. Used this way, it is generally referred to as GPGPU (general-purpose computing on GPUs), and it plays a growing part in domains such as medical imaging, bioinformatics, computational fluid dynamics, computational finance, seismic exploration, defense, and artificial intelligence.

While the CPU has always been about speed, the GPU is more about throughput. GPUs are clocked at around 700-900 MHz, whereas typical CPUs run at 2.5-3.0 GHz. However, CPU core counts are limited to 2, 4, or 8, while GPU core counts range from hundreds to thousands, and that is where things become interesting.
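A crude back-of-the-envelope comparison makes the point concrete. The sketch below simply multiplies core count by clock rate, using hypothetical values picked from the ranges above (1,000 GPU cores at 0.8 GHz versus 4 CPU cores at 3.0 GHz). It deliberately ignores SIMD width, instructions per cycle, and memory limits, so treat the ratio as an illustration of scale, not a performance prediction.

```python
def aggregate_cycles_per_second(cores: int, clock_ghz: float) -> float:
    """Naive aggregate: cores x clock rate. Ignores SIMD width, IPC, and memory limits."""
    return cores * clock_ghz * 1e9


# Hypothetical configurations chosen from the ranges quoted in the text.
gpu_cycles = aggregate_cycles_per_second(cores=1000, clock_ghz=0.8)
cpu_cycles = aggregate_cycles_per_second(cores=4, clock_ghz=3.0)
ratio = gpu_cycles / cpu_cycles
print(f"GPU/CPU aggregate cycle ratio: {ratio:.1f}x")  # 66.7x under these assumptions
```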

Accelerating Applications:

Not everything should be ported to the GPU. If an application is computation-heavy, porting it makes sense; otherwise, the program may actually run slower on the GPU than on the CPU, because the cost of copying data to and from the GPU is not recovered by the faster computation. This is why engineers porting their first program so often observe slower performance on the GPU than on the CPU.
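A simple cost model shows why light workloads lose on the GPU. The sketch below adds a fixed launch overhead, a round-trip transfer, and the CPU compute time divided by an assumed kernel speedup. The 12 GB/s bandwidth, 0.05 ms overhead, 8 MB buffer, and 20x kernel speedup are all hypothetical parameters, and the model assumes the output is the same size as the input.

```python
def gpu_time_ms(data_bytes: float, cpu_compute_ms: float, kernel_speedup: float,
                bandwidth_gb_s: float = 12.0, launch_overhead_ms: float = 0.05) -> float:
    """Estimated end-to-end GPU time: copy in, compute, copy out.

    Assumes (hypothetically) that the output is the same size as the input and
    that the kernel runs `kernel_speedup` times faster than the CPU version.
    """
    transfer_ms = 2 * data_bytes / (bandwidth_gb_s * 1e9) * 1e3  # host->device + device->host
    return launch_overhead_ms + transfer_ms + cpu_compute_ms / kernel_speedup


buffer_bytes = 8e6  # hypothetical 8 MB input buffer
light = gpu_time_ms(buffer_bytes, cpu_compute_ms=0.5, kernel_speedup=20)
heavy = gpu_time_ms(buffer_bytes, cpu_compute_ms=500, kernel_speedup=20)
print(f"light job: CPU 0.5 ms vs GPU {light:.2f} ms")
print(f"heavy job: CPU 500 ms vs GPU {heavy:.2f} ms")
```

With the same data size and the same kernel speedup, the light job is dominated by the transfer and ends up slower than the CPU, while the heavy job amortizes the transfer easily.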

Porting an algorithm has three main phases: profiling, porting, and optimizing.

- Step 1: Profile the different parts of the code and identify hotspots.

- Step 2: Write CUDA code for the hotspots.

- Step 3: Compare timings and optimize the code based on the data.
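Step 1 can be approximated with plain wall-clock timing before reaching for a full profiler (for example gprof on the CPU side, or NVIDIA's nvprof for CUDA code). The sketch below times each stage of a pipeline (best of a few runs) and ranks the stages by their share of the total; the three stages are hypothetical stand-ins for parts of a real application.

```python
import time
from typing import Callable, Dict, List, Tuple


def profile_stages(stages: Dict[str, Callable[[], object]],
                   repeats: int = 3) -> List[Tuple[str, float, float]]:
    """Time each stage (best of `repeats` runs).

    Returns (name, seconds, share_of_total) tuples sorted slowest first, so the
    first entries are the hotspot candidates for porting.
    """
    best: Dict[str, float] = {}
    for name, fn in stages.items():
        fastest = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            fn()
            fastest = min(fastest, time.perf_counter() - start)
        best[name] = fastest
    total = sum(best.values()) or 1.0  # avoid dividing by zero for trivial stages
    ranked = sorted(best.items(), key=lambda item: item[1], reverse=True)
    return [(name, secs, secs / total) for name, secs in ranked]


# Hypothetical pipeline stages standing in for parts of a real application.
stages = {
    "load": lambda: list(range(1_000)),
    "filter": lambda: sum(i * i for i in range(300_000)),
    "save": lambda: ",".join(str(i) for i in range(1_000)),
}
for name, secs, share in profile_stages(stages):
    print(f"{name:>8}: {secs * 1e3:7.3f} ms ({share:.0%})")
```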

It may take anywhere from two to six rounds of optimization to achieve good results. At the end of each round, the developer needs to make sure that the GPU output exactly matches the CPU output.
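A small comparison helper makes this check repeatable after every round. Integer outputs such as histogram bins should match exactly. Floating-point outputs may differ in the last bits when the GPU evaluates operations in a different order or uses fused multiply-add, so the sketch below also accepts an explicit tolerance. The function name and its tolerance parameters are illustrative, not part of any particular test framework.

```python
import math
from typing import Sequence


def first_mismatch(cpu_out: Sequence[float], gpu_out: Sequence[float],
                   rel_tol: float = 0.0, abs_tol: float = 0.0) -> int:
    """Return the index of the first differing element, or -1 if the outputs match.

    With both tolerances at 0.0 this is an exact comparison. A length difference
    is reported as a mismatch at the end of the shorter output.
    """
    for i, (c, g) in enumerate(zip(cpu_out, gpu_out)):
        if not math.isclose(c, g, rel_tol=rel_tol, abs_tol=abs_tol):
            return i
    if len(cpu_out) != len(gpu_out):
        return min(len(cpu_out), len(gpu_out))
    return -1


print(first_mismatch([3, 0, 7], [3, 0, 7]))                        # integers, exact: -1
print(first_mismatch([1.0, 2.0], [1.0, 2.0000001]))                # floats, exact: 1
print(first_mismatch([1.0, 2.0], [1.0, 2.0000001], rel_tol=1e-6))  # floats, tolerant: -1
```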

There are 3 main factors involved in porting.

- Computation vs. Memory transfer

- Algorithm Reengineering

- System Architecture

One major aspect of porting an application to the GPU is memory transfer. First, the data must be copied from the CPU to the GPU; then the computation is performed on the GPU; finally, the output is transferred back to the CPU. This two-way transfer between CPU and GPU is one of the most important factors in optimization. Each memory access on the GPU costs many more cycles than an arithmetic operation, so GPU code should keep memory accesses to a minimum. Where possible, memory transfer and computation should overlap. CUDA features such as pinned memory, asynchronous memory transfer and execution, and texture, constant, and shared memory can be used to achieve this.
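The benefit of overlapping transfer and computation can be estimated with a small pipeline model. In CUDA, this overlap is typically obtained by splitting the data into chunks and issuing `cudaMemcpyAsync` copies from pinned host memory into several streams, so that one chunk is being copied while another is being processed. The sketch below only simulates the timing. The stage times are hypothetical, and it assumes the GPU can run a host-to-device copy, a kernel, and a device-to-host copy concurrently (which depends on the number of copy engines) and ignores per-chunk overhead.

```python
from typing import Sequence


def pipelined_time(stage_ms: Sequence[float], n_chunks: int) -> float:
    """Total time when the data is split into `n_chunks` equal chunks and each
    chunk flows through the stages (e.g. copy in, kernel, copy out) in order.

    Assumes each stage has its own engine, so different chunks can occupy
    different stages at the same time; per-chunk overhead is ignored.
    """
    per_chunk = [t / n_chunks for t in stage_ms]
    engine_free = [0.0] * len(stage_ms)  # when each engine finishes its previous chunk
    for _ in range(n_chunks):
        ready = 0.0  # when this chunk finished its previous stage
        for s, t in enumerate(per_chunk):
            start = max(ready, engine_free[s])
            engine_free[s] = start + t
            ready = engine_free[s]
    return engine_free[-1]


stages = [4.0, 6.0, 4.0]  # hypothetical host->device, kernel, device->host times (ms)
for n in (1, 2, 4, 8):
    print(f"{n} chunk(s): {pipelined_time(stages, n):.2f} ms")
```

With one chunk the time is the plain sum of the stages; as the chunk count grows, the total approaches the time of the slowest stage. In practice, per-transfer overhead eventually makes very small chunks counterproductive.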

The following table shows timings for an RGB histogram calculation. The RGB histogram needs three memory accesses (the R, G, and B channels) per pixel, which takes a toll on performance. The computation itself is only a counter increment, but that increment must be an atomic operation, which slows performance further. These speedups are not as high as those of other algorithms, but the histogram serves as a good example for understanding the limits of GPU porting.
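One common way to reduce atomic contention in a GPU histogram is privatization: each thread block builds its own local histogram (typically in shared memory) and then merges it into the global histogram with one `atomicAdd` per non-empty bin. This technique is swapped in here for illustration and is not necessarily the approach used for the numbers in the table. The sketch below emulates it sequentially and checks it against a plain CPU reference; the block size and the synthetic image are hypothetical.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]  # 8-bit R, G, B values


def rgb_histogram(pixels: Sequence[Pixel]) -> List[List[int]]:
    """Reference CPU histogram: three reads and three increments per pixel."""
    hist = [[0] * 256 for _ in range(3)]
    for r, g, b in pixels:
        hist[0][r] += 1
        hist[1][g] += 1
        hist[2][b] += 1
    return hist


def rgb_histogram_privatized(pixels: Sequence[Pixel], block_size: int = 256) -> List[List[int]]:
    """Emulates per-block privatization: each block of pixels fills its own local
    histogram, and the local bins are merged into the global histogram once per
    block, so far fewer updates hit the same global counters."""
    global_hist = [[0] * 256 for _ in range(3)]
    for start in range(0, len(pixels), block_size):
        local = rgb_histogram(pixels[start:start + block_size])  # "shared memory" copy
        for channel in range(3):
            for value, count in enumerate(local[channel]):
                if count:  # a GPU version would issue one atomicAdd per non-empty bin
                    global_hist[channel][value] += count
    return global_hist


# Synthetic test image (hypothetical data).
image = [(i % 256, (7 * i) % 256, 255 - i % 256) for i in range(10_000)]
print(rgb_histogram(image) == rgb_histogram_privatized(image))  # True
```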

RGB Histogram calculations

| Image resolution | Time on Tesla K20C (ms) | Time on CPU, i7 quad core @ 3.4 GHz (ms) | Performance improvement |
|---|---|---|---|
| 360p (640 x 360) | 4 | 16 | 4X |
| 480p (854 x 480) | 4 | 26 | 6.5X |
| 540p (960 x 540) | 7 | 37 | 5.3X |
| 720p (1280 x 720) | 8 | 50 | 6.3X |
| 1080p (1920 x 1080) | 20 | 70 | 3.5X |

NVIDIA provides some excellent GPU-accelerated libraries for general computation and image processing. The first step of porting is to drop in these libraries, but the real game starts when you begin reworking the algorithm itself. On the GPU, work is done by thousands of threads, so the problem must be divisible into small, identical units. This reengineering requires an approach to problem solving that is very different from CPU programming and is hard to master except through experience.
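The "small, identical units" idea usually means one thread per data element, with each thread computing its own global index from its block and thread coordinates. The sketch below emulates a 1-D CUDA launch sequentially in Python to show that mapping. The `launch` helper and the brightness kernel are hypothetical illustrations; the index expression mirrors CUDA's `blockIdx.x * blockDim.x + threadIdx.x`, and the grid-stride loop lets a fixed-size grid cover any input size.

```python
from typing import Callable, List


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Sequentially emulate a 1-D CUDA launch: run the kernel body once per
    (block, thread) pair. A real GPU runs these threads in parallel."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, grid_dim, *args)


def brighten_kernel(block_idx: int, block_dim: int, thread_idx: int, grid_dim: int,
                    pixels: List[int], out: List[int], delta: int) -> None:
    """Hypothetical per-pixel kernel: each thread handles its own pixel(s)."""
    i = block_idx * block_dim + thread_idx  # CUDA: blockIdx.x * blockDim.x + threadIdx.x
    stride = grid_dim * block_dim           # total number of threads in the grid
    while i < len(pixels):                  # grid-stride loop covers any input size
        out[i] = min(255, pixels[i] + delta)
        i += stride


pixels = [10, 250, 0, 100, 200]
out = [0] * len(pixels)
launch(brighten_kernel, 2, 2, pixels, out, 10)  # 4 "threads" for 5 pixels
print(out)  # [20, 255, 10, 110, 210]
```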

In most cases there is hardly any similarity between the CPU and GPU code for the same algorithm. Generally, the GPU version either assigns a single thread to each node or uses a bottom-up, tree-like approach in which each stage does its work, synchronizes, and passes its results to the next stage. This works when the data size and pattern are known in advance; when both are unknown, dynamic parallelism should be used so that the algorithm adapts to the data at run time. Amdahl’s Law and Gustafson’s Law are good tools for estimating how much scaling can be achieved for a particular application.
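Both ideas in the paragraph above can be sketched briefly. The reduction below is a sequential emulation of the bottom-up tree pattern: at each level, every active "thread" adds an element a fixed stride away, and on a GPU a barrier such as `__syncthreads()` would separate the levels. The two speedup functions are the standard forms of Amdahl's Law (fixed problem size) and Gustafson's Law (problem size grows with the processor count), where `p` is the parallelizable fraction of the run time and `n` the number of processors. The inputs used in the examples are hypothetical.

```python
from typing import List, Sequence


def tree_reduce_sum(values: Sequence[float]) -> float:
    """Bottom-up pairwise reduction. At each level, 'thread' i adds the element
    `stride` positions away; on a GPU a barrier separates the levels."""
    data: List[float] = list(values)
    n = len(data)
    if n == 0:
        return 0.0
    stride = 1
    while stride < n:
        for i in range(0, n - stride, 2 * stride):  # independent work at this level
            data[i] += data[i + stride]
        stride *= 2                                  # half as many active elements next level
    return data[0]


def amdahl_speedup(p: float, n: int) -> float:
    """Fixed problem size: speedup = 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - p) + p / n)


def gustafson_speedup(p: float, n: int) -> float:
    """Problem size scaled with n: speedup = (1 - p) + p * n."""
    return (1.0 - p) + p * n


print(tree_reduce_sum([1, 2, 3, 4, 5]))       # 15
print(round(amdahl_speedup(0.9, 10), 2))      # 5.26
print(round(amdahl_speedup(0.9, 1000), 2))    # 9.91, near the 1 / (1 - p) = 10 ceiling
print(round(gustafson_speedup(0.9, 10), 2))   # 9.1
```

Amdahl's Law explains the diminishing returns for a fixed-size input, while Gustafson's Law describes the case where a larger GPU is used to process proportionally more data.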

Our team is working on porting an image classifier to the Tesla K20C. We obtained an improvement of up to 71% compared to a quad-core Xeon clocked at 2.5 GHz just by replacing FFTW calls with cuFFT calls.

It is generally known in advance which system a solution will be deployed on. A generic solution that runs on any system is possible, but a solution tuned for a known GPU sometimes gives a slight performance edge. The same algorithm running on two different GPUs may perform very differently. Life is not fair: one cannot expect a constant increase in performance from a constant increase in resources. There is always a sweet spot beyond which adding resources starts to reduce the output. A solution has to be designed with the number of cores, the memory bandwidth, and the input data rate in mind to gain maximum performance.
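A simplified bottleneck model helps locate the point where more cores stop helping: throughput is capped by the lowest of compute capacity, memory bandwidth, and the rate at which input arrives. All parameter values below are hypothetical. Note that this model only shows the plateau; the actual decline beyond the sweet spot that the text describes comes from effects such as contention and synchronization, which the sketch does not model.

```python
import math


def achievable_rate(cores: int, items_per_core_per_s: float,
                    bandwidth_bytes_per_s: float, bytes_per_item: float,
                    input_items_per_s: float) -> float:
    """Simplified bottleneck model: throughput is capped by whichever is lowest of
    compute capacity, memory bandwidth, and the input data rate."""
    compute_bound = cores * items_per_core_per_s
    memory_bound = bandwidth_bytes_per_s / bytes_per_item
    return min(compute_bound, memory_bound, input_items_per_s)


def sweet_spot_cores(items_per_core_per_s: float, bandwidth_bytes_per_s: float,
                     bytes_per_item: float, input_items_per_s: float) -> int:
    """Smallest core count at which compute stops being the bottleneck."""
    other_bound = min(bandwidth_bytes_per_s / bytes_per_item, input_items_per_s)
    return math.ceil(other_bound / items_per_core_per_s)


# Hypothetical workload: 1e6 items/s per core, 100 GB/s, 10 bytes/item, 2e9 items/s input.
params = dict(items_per_core_per_s=1e6, bandwidth_bytes_per_s=100e9,
              bytes_per_item=10, input_items_per_s=2e9)
print(sweet_spot_cores(**params))                   # 2000
for cores in (500, 1000, 2000, 4000):
    print(cores, achievable_rate(cores, **params))  # flat from 2000 cores onward
```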

We have ported some image processing algorithms to the Tegra TK1 and the Tesla K20C. The numbers follow; as you can see, the Tesla numbers are exceptional.

Performance for full HD (1920 x 1080) image processing (time in ms)

| Algorithm | ARM quad core @ 2.3 GHz | Tegra TK1 GPU | Performance improvement | Intel Xeon quad core @ 2.50 GHz | Tesla K20C | Performance improvement |
|---|---|---|---|---|---|---|
| Gaussian filter | 307 | 23 | 13X | 150 | 1.8 | 83X |
| Sobel filter | 986 | 129 | 7X | 423 | 1.68 | 250X |
| Generalized Hough transform | 440 | 190 | 2.3X | 242 | 11 | 22X |
| Object detection | 215.8 | 54.5 | 4X | 109.5 | 5.7 | 19X |

The Road Ahead: The Future of GPUs

GPGPU has not looked back since 2006, when NVIDIA unveiled CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model for general-purpose computing on GPUs. In addition, continuously evolving architectures such as Tesla, Fermi, Kepler, Maxwell, and Volta have made GPU computing faster and more efficient.

NVIDIA’s future plans include on-package stacked DRAM (3D memory) and NVLink. NVLink is NVIDIA’s answer to the memory transfer bottleneck of the PCIe bus: it is expected to be 5 to 12 times faster than third-generation PCIe, allowing data transfer speeds of 80 to 200 GB/s between the CPU and GPU.
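Some quick arithmetic puts these bandwidth figures in perspective. The sketch below computes an idealized transfer time (bytes divided by bandwidth, with no latency or protocol overhead) for one 8-bit RGB full-HD frame. It takes about 15.75 GB/s as the theoretical per-direction bandwidth of a PCIe 3.0 x16 link; real-world throughput is lower, so treat the results as lower bounds.

```python
PCIE3_X16_GB_S = 15.75  # approx. theoretical per-direction bandwidth of PCIe 3.0 x16


def transfer_ms(n_bytes: int, bandwidth_gb_s: float) -> float:
    """Idealized transfer time: bytes divided by bandwidth, no latency or overhead."""
    return n_bytes / (bandwidth_gb_s * 1e9) * 1e3


frame_bytes = 1920 * 1080 * 3  # one 8-bit RGB full-HD frame: 6,220,800 bytes
for label, bw in (("PCIe 3.0 x16", PCIE3_X16_GB_S), ("80 GB/s", 80.0), ("200 GB/s", 200.0)):
    print(f"{label:>12}: {transfer_ms(frame_bytes, bw):.3f} ms per frame, "
          f"{bw / PCIE3_X16_GB_S:.1f}x PCIe 3.0")
```

The ratios of roughly 5x and 12.7x line up with the "5 to 12 times" figure quoted for NVLink.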

NVIDIA’s progress in GPGPU is testimony to the many and varied new areas where GPUs can be deployed. At eInfochips, we extend it to engineer products for domains such as oil and gas exploration, medical imaging, bioinformatics, and artificial intelligence.

The work we have done in the GPU space has immense applicability in oil and gas, medical imaging (CT scan, X-ray, ultrasound, and endoscopy), avionics, media and broadcast, and molecular and genetic research.

PS: If you are visiting SC14 in New Orleans, LA, meet us so that we can broaden the discussion beyond this post. Write to us at marketing@einfochips.com to set up a meeting.