When designing a system, it is important to consider every aspect of the ecosystem: the hardware, the software, the data, and the mechanisms used to store that data. The design should also be forward-looking, accounting for potential growth and seamless operation.

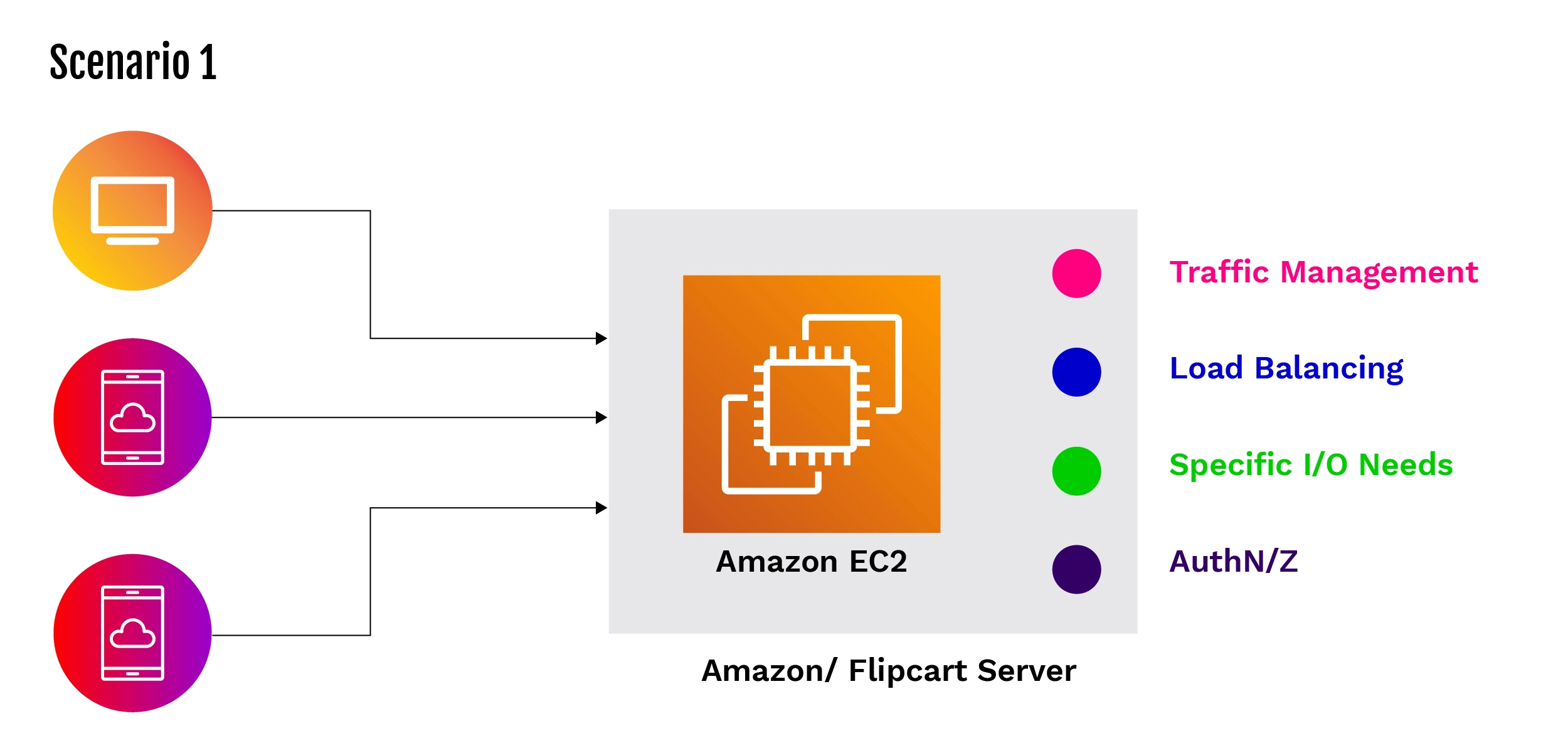

A scalable system typically has a backend server, a browser-based UI, and mobile applications. Consider an e-commerce application such as Amazon or Flipkart. We will start with a simple version of such a system, examine its constraints and the ways to overcome them, and design a robust system accordingly.

In the above scenario, users reach the application from web browsers and mobile apps. There is no layer between the client (user) and the backend server where the different microservices run, which means that each microservice is a public endpoint. This approach is called direct client-to-microservice architecture. It suits small-scale applications and cases where the client is a server-side web application. When the client is a mobile application or a single-page application (SPA), this approach causes issues.

Incoming traffic handling, load balancing, client-specific input and output needs, and authentication and authorization all have to be handled in application code and need special attention. This creates overhead for the application.

What this scenario needs is a separate component that takes on all of the above overheads and also handles the security aspects. That component is an API gateway: it takes care of traffic management, load balancing, client-specific input and output needs, authentication, and authorization.

In the second scenario, we introduce an orchestrator that takes care of the issues discussed in scenario 1. This orchestrator is an API gateway. Unlike in scenario 1, the microservices are not exposed as public endpoints. The API gateway is the single point of contact for clients, and it in turn forwards each request to the respective microservice. In this scenario there is a single API gateway for all users, so as the number of users grows, the request volume on that one gateway may cause issues.
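The single-entry-point idea can be illustrated with a minimal sketch. Everything here is hypothetical: the `ApiGateway` class, the route prefixes, and the two stand-in service functions are assumptions made for illustration, and a real gateway would forward requests over the network rather than call local functions.

```python
from typing import Callable, Dict


# Hypothetical in-process stand-ins for microservices. In a real deployment
# these would be network calls to services that are not publicly reachable.
def catalog_service(path: str) -> dict:
    return {"service": "catalog", "path": path}


def cart_service(path: str) -> dict:
    return {"service": "cart", "path": path}


class ApiGateway:
    """Single public entry point that forwards requests by path prefix."""

    def __init__(self) -> None:
        self._routes: Dict[str, Callable[[str], dict]] = {}

    def register(self, prefix: str, handler: Callable[[str], dict]) -> None:
        self._routes[prefix] = handler

    def handle(self, path: str) -> dict:
        # Try longer prefixes first so the most specific route wins.
        for prefix in sorted(self._routes, key=len, reverse=True):
            if path == prefix or path.startswith(prefix + "/"):
                return {"status": 200, "body": self._routes[prefix](path)}
        return {"status": 404, "body": {"error": "no route"}}


gateway = ApiGateway()
gateway.register("/catalog", catalog_service)
gateway.register("/cart", cart_service)
```

Clients only ever call `gateway.handle(...)`; which service answers a given path is a detail of the gateway's route table.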

In the third scenario, requests are distributed across API gateways based on client type. There are two API gateways, one for web browser-based applications and one for mobile applications, which reduces the request load on each gateway. Placing the API gateways in an auto-scaling group, much as one would with EC2 instances, makes the setup more reliable and robust: as requests increase and the load on a gateway grows, another gateway instance is spawned automatically to serve the requests, which in turn reduces the load on each instance. This provides high availability of the system.

The API gateway also enables request aggregation: the client combines multiple HTTP requests into a single request, and the gateway combines the individual microservice responses into a single response. For example, the Amazon mobile app has several concurrent tasks, such as advertising, client information, and cart information. In such use cases, the client bundles multiple requests, targeting multiple microservices, into a single request and sends it to the API gateway. The API gateway splits that request and sends the individual requests to the respective microservices. It then collects the responses from those microservices, aggregates them into a single response, and sends it back to the client.
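A rough sketch of the fan-out and merge step follows. The `SERVICES` table and the `aggregate` function are hypothetical names introduced for illustration, and the lambdas stand in for real calls to the advertising, profile, and cart microservices.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical stand-ins for backend microservices, keyed by a made-up name.
SERVICES: Dict[str, Callable[[str], dict]] = {
    "ads": lambda user_id: {"banners": ["sale"]},
    "profile": lambda user_id: {"user_id": user_id},
    "cart": lambda user_id: {"items": 2},
}


def aggregate(user_id: str, wanted: List[str]) -> dict:
    """Fan one client request out to several services and merge the replies."""
    unknown = [name for name in wanted if name not in SERVICES]
    if unknown:
        raise KeyError(f"unknown services: {unknown}")
    # Call the services concurrently so total latency is close to the
    # slowest single call rather than the sum of all calls.
    with ThreadPoolExecutor(max_workers=max(1, len(wanted))) as pool:
        futures = {name: pool.submit(SERVICES[name], user_id) for name in wanted}
        return {name: future.result() for name, future in futures.items()}
```

The client makes one round trip, for example `aggregate("u1", ["ads", "cart"])`, instead of one round trip per service, which matters most on mobile networks.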

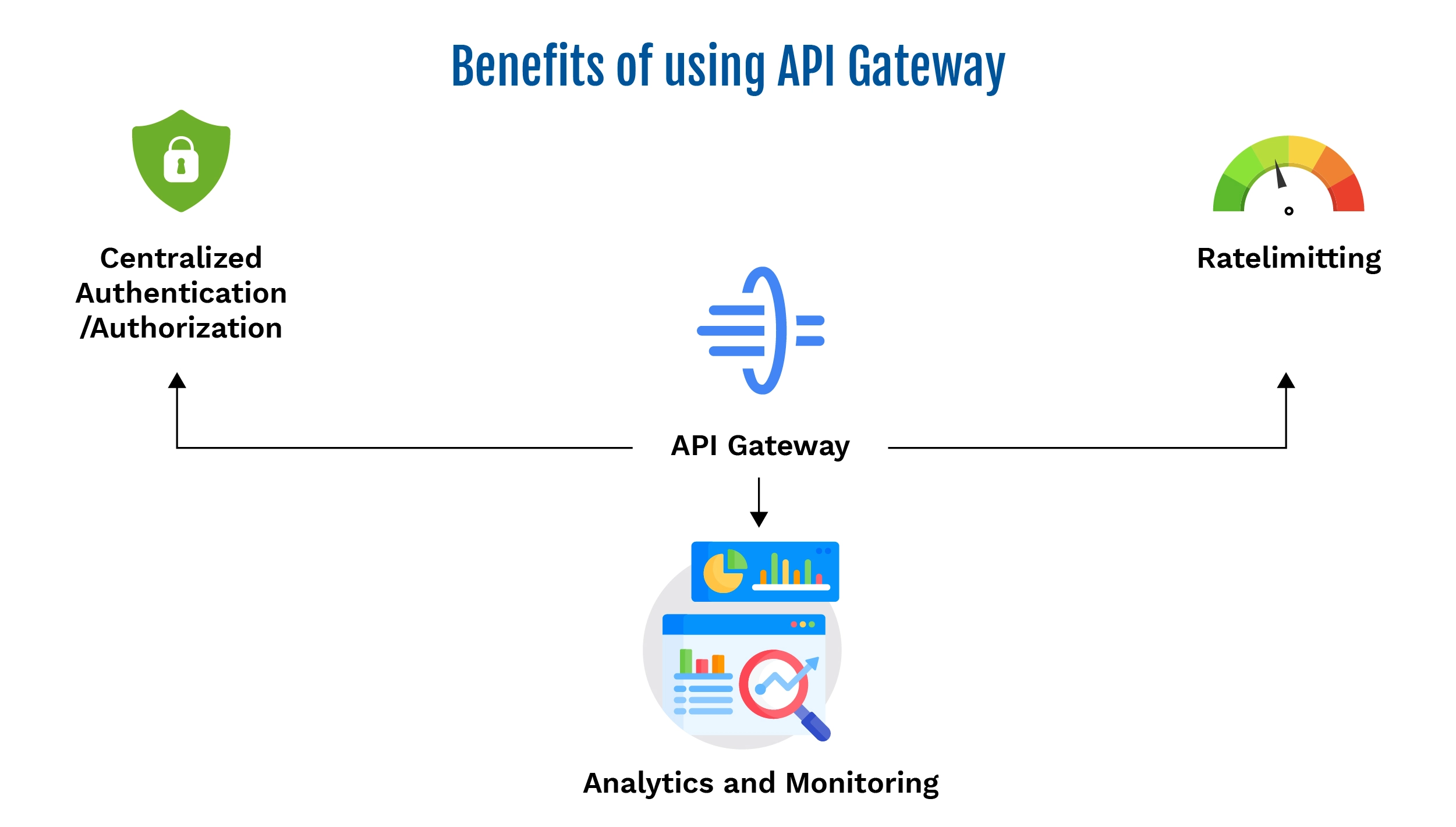

Benefits of using an API gateway

1. Centralized Authentication and Authorization:

One of the key advantages of an API gateway is that it handles authentication and authorization at a central point, ensuring that the backend services can focus solely on business logic. The gateway validates tokens, such as JWTs or OAuth tokens, on behalf of the backend services. By offloading security policies to the API gateway, services can avoid any redundant code, reduce maintenance efforts, and achieve consistent security across the board. This approach also makes updating or adjusting policies far easier, as they only need to be updated at the gateway rather than individually across services.

2. Rate Limiting and Throttling:

API gateways are also responsible for managing traffic flow to backend services by enforcing rate limiting and throttling rules. Rate limiting caps the number of requests a client can send in a given timeframe, which protects services from being overloaded or facing denial-of-service attacks. Throttling slows down request processing when a certain threshold is reached and helps distribute traffic smoothly. These controls allow systems to handle high volumes while ensuring stable and fair resource usage.
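One common way to implement a per-client rate limit is a token bucket, sketched below. This is one possible technique rather than what any particular gateway product does; the class names, the parameters, and the injectable `clock` are assumptions for illustration.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `rate_per_sec` tokens/second."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one bucket per client so one client cannot starve the others."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate_per_sec
        self._capacity = capacity
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        bucket = self._buckets.get(client_id)
        if bucket is None:
            bucket = TokenBucket(self._rate, self._capacity, self._clock)
            self._buckets[client_id] = bucket
        return bucket.allow()
```

When `allow` returns `False`, a gateway would typically reject the request with HTTP 429 (Too Many Requests); a throttling variant would delay the request instead of rejecting it.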

3. Analytics and Monitoring:

Another key benefit of an API gateway is its ability to provide insights into API usage and performance through built-in analytics. Metrics like request rates, error counts, response times, and data usage can be tracked and monitored, giving real-time visibility into how clients interact with the APIs. This visibility enables teams to catch potential issues early, optimize performance, and gain valuable information on user behavior, which aids in scaling and enhancing the system based on actual usage patterns.

4. Enhanced Developer Experience:

With an API gateway managing requests, developers have a more streamlined experience because they interact with a single access point rather than dealing directly with multiple backend services. By simplifying the API structure and providing a consistent interface to clients, the gateway abstracts the changes in backend services, allowing for seamless updates or additions to the system. This makes it easier to expand the system, add new services, and maintain a smooth client experience over time.

Implementation and Scalability Considerations

1. Auto-scaling and High Availability:

As traffic to the API gateway fluctuates, especially during peak times, it is essential to configure auto-scaling to adjust resources dynamically based on demand. By placing the API gateway in an auto-scaling group, new instances of the gateway can be spun up automatically when traffic spikes, distributing the load across multiple instances. This setup ensures that the gateway can handle sudden increases in traffic without affecting performance. Additionally, for high availability, API gateways should be deployed across multiple availability zones or regions, providing redundancy and minimizing the risk of downtime due to regional outages.
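To make the scaling decision concrete, here is a minimal sketch of a proportional scaling rule. It is an illustrative assumption, not the policy of any particular cloud provider: the function name, the requests-per-second metric, and the default bounds are all hypothetical, and real auto-scalers add cooldown periods and metric smoothing on top.

```python
import math


def desired_instances(current: int,
                      observed_rps_per_instance: float,
                      target_rps_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Scale the instance count so each instance lands near its target load."""
    if current < 1 or target_rps_per_instance <= 0:
        raise ValueError("current must be >= 1 and target must be positive")
    # Proportional rule: if each instance sees 1.8x its target load,
    # ask for roughly 1.8x as many instances, rounded up.
    raw = math.ceil(current * observed_rps_per_instance / target_rps_per_instance)
    # Clamp to the group's configured bounds.
    return max(min_instances, min(max_instances, raw))
```

For example, two gateway instances each seeing 900 requests per second against a target of 500 would be scaled out to four, while a quiet period scales the group back toward `min_instances`.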

2. Caching to Improve Performance:

Caching is an effective way to reduce response times and lighten the load on backend services. By caching frequently requested responses at the gateway level, repeated requests can be served directly by the gateway without needing to contact the backend. This can significantly reduce latency and enhance the user experience. Effective caching strategies, such as setting time-to-live (TTL) values, can be implemented to ensure that cached data remains up-to-date and relevant.
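A TTL-based cache at the gateway can be sketched in a few lines. The `TtlCache` class and its `get_or_fetch` method are hypothetical names for this example; `fetch` stands in for the call to the backend service.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TtlCache:
    """Caches responses per key and refetches once an entry is older than the TTL."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # fresh hit: the backend is not contacted
        value = fetch()  # miss or expired: go to the backend
        self._entries[key] = (now + self.ttl, value)
        return value
```

A short TTL keeps data fresher at the cost of more backend calls, so the value is usually chosen per endpoint: longer for product descriptions, shorter or no caching for cart contents.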

3. Distributed Logging and Monitoring:

A well-designed API gateway setup includes logging and monitoring tools to track requests, performance, and errors across the gateway infrastructure. Logging helps identify slow-performing APIs, track usage patterns, and detect anomalies that may indicate issues. With distributed logging and monitoring, one can aggregate logs from multiple gateway instances into a centralized system, enabling quick access to insights on system health and behavior. Tools like Prometheus, Grafana, and ELK Stack are commonly used to set up robust monitoring and logging for API gateways.

4. Security and Rate Limiting Configuration:

Scalability considerations must also include robust security measures to prevent unauthorized access and overload attacks. The API gateway should be configured with rate limiting to control the volume of requests per client. By setting quotas or throttling rules, one can prevent any single client from overwhelming the system and ensure fair access for all users. Additionally, implementing IP whitelisting or blacklisting and token-based authentication adds extra layers of security that scale with the number of users and devices accessing the services.

5. Service Discovery and Dynamic Routing:

As the microservices within the system grow, the API gateway must be able to dynamically route requests to the appropriate service endpoints. Integrating service discovery with the API gateway allows it to automatically update routing information when new services are added, or existing services are modified. This ensures that as the system scales, the gateway can efficiently route requests without manual configuration updates. Tools like Consul or Eureka can be integrated to handle dynamic service discovery.
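The sketch below shows the idea with a tiny in-memory registry and round-robin selection. It is not the API of Consul or Eureka; the `ServiceRegistry` class, its methods, and the addresses are hypothetical and only illustrate how a gateway can resolve a service name to a live instance at request time.

```python
from typing import Dict, List


class ServiceRegistry:
    """In-memory stand-in for a service discovery backend."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._counters: Dict[str, int] = {}

    def register(self, service: str, address: str) -> None:
        instances = self._instances.setdefault(service, [])
        if address not in instances:
            instances.append(address)

    def deregister(self, service: str, address: str) -> None:
        instances = self._instances.get(service, [])
        if address in instances:
            instances.remove(address)

    def resolve(self, service: str) -> str:
        """Pick the next instance for a service in round-robin order."""
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no registered instance for {service!r}")
        index = self._counters.get(service, 0) % len(instances)
        self._counters[service] = index + 1
        return instances[index]
```

Because the gateway calls `resolve` per request, instances that register or deregister take effect immediately, without editing the gateway's configuration.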

6. Failover and Resiliency Configurations:

For a robust and scalable API gateway, it is essential to configure failover mechanisms to manage potential outages or failures in backend services. Circuit breakers, retries, and fallback methods are useful strategies. A circuit breaker pattern temporarily blocks requests to a failing service, preventing further strain on the system while allowing other services to continue functioning. Retries and fallbacks provide alternative paths, such as delivering a cached or generic response if a service is unavailable, improving the system’s overall resiliency.

7. Resource Allocation and Load Testing:

It is crucial to conduct regular load testing to understand the gateway’s limits under various traffic scenarios. This testing helps identify bottlenecks and establish baseline metrics for memory, CPU, and bandwidth usage under heavy load. Allocating sufficient resources, setting up proper configurations, and testing them regularly helps the API gateway remain responsive and prepared for future growth.
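Of the considerations above, the circuit breaker with a fallback is the one that benefits most from a concrete illustration. The following is a minimal, single-threaded sketch; the `CircuitBreaker` class, its thresholds, and the injectable `clock` are assumptions for this example, and production implementations also need thread safety and per-service state.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Stops calling a failing service for a while and serves a fallback instead."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, func: Callable[[], Any], fallback: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # open: do not touch the failing service
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

The `fallback` callable is where a cached or generic response would be returned, so clients still receive an answer while the failing service recovers.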

Conclusion:

An API gateway is a critical component in modern system architecture, especially when dealing with microservices. It simplifies communication between the clients and backend services while addressing important concerns like traffic management, security, and scalability. By centralizing functionalities such as authentication, rate limiting, request aggregation, and monitoring, the API gateway ensures that backend services remain focused on business logic, making the system more efficient and easier to maintain.

As the applications scale, implementing robust practices like auto-scaling, caching, and failover mechanisms further strengthens the gateway’s reliability and performance. The API gateway not only enhances the user experience with faster and more secure communication but also supports developers by providing flexibility and abstraction from backend complexities.

Incorporating an API gateway into the system design is not just a choice but a necessity for building scalable, secure, and future-ready applications.
