A computer vision solution uses cameras and computer vision algorithms to enable machines to perceive and understand their surroundings. It combines the camera’s ability to capture visual data with algorithms that analyze that data and make intelligent decisions based on it.

Edge AI is a form of distributed computing in which computation occurs outside the cloud, at the “edge” of the network, rather than on a centralized server. It relies on devices that capture visual data and perform computations locally, close to the source of the data, which makes that data immediately actionable. Only selected data is stored in the cloud for analytics and insights. When deploying edge-based AI solutions using a camera, the following are important considerations:

- Image and video resolution and quality parameters

- Image and video processing requirements

- Computation: CPU, GPU, DSP, and AI engine for inferencing and decision making at the edge

- Connectivity at the edge and to the cloud
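The link between resolution and connectivity can be made concrete with a rough data-rate estimate. The sketch below is illustrative only: the function names, the 12 bits per pixel (8-bit YUV 4:2:0), and the 100:1 compression ratio are assumptions chosen for the example, not figures from any specific camera or codec.

```python
def raw_video_bitrate_mbps(width, height, fps, bits_per_pixel=12):
    """Uncompressed video data rate in megabits per second.

    bits_per_pixel=12 assumes 8-bit YUV 4:2:0; other formats differ.
    """
    return width * height * bits_per_pixel * fps / 1e6


def compressed_bitrate_mbps(raw_mbps, compression_ratio):
    """Approximate bitrate after encoding, for an assumed compression ratio."""
    return raw_mbps / compression_ratio


# Example: a 1080p stream at 30 frames per second.
raw = raw_video_bitrate_mbps(1920, 1080, 30)
# Assumed 100:1 ratio; real ratios depend on codec, content, and quality settings.
encoded = compressed_bitrate_mbps(raw, compression_ratio=100)

print(f"raw: {raw:.1f} Mbps, encoded: {encoded:.2f} Mbps")
```

Even under these assumptions, the gap between the raw and encoded rates shows why processing at the edge and sending only selected data to the cloud reduces connectivity requirements.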

The edge AI solution flow includes five stages: Capture, Process, Record, Analyze, and Sustenance.

The capture stage involves image sensor integration, characterization, and processing. It is the first step toward obtaining a usable image or video, which is then processed by subsequent subsystems. Once the capture stage provides a good image or video as input, the process stage applies post-processing blocks, encoding/transcoding, and streaming, depending on the use case and application. For example, streaming requirements for broadcasting equipment are different from those of healthcare, but low latency is a common requirement for both use cases.

The record stage provides recording, archiving, and on-demand viewing support. Different use cases, such as telematics, drones, robotics, and healthcare, have different needs for recording and archiving, including aspects like resolutions and compliance with laws for record-keeping.

The analyze stage is critical because AI is now central to most vision applications. This is where computational needs matter most and where architecting the system becomes challenging. Partitioning the AI implementation between edge and cloud is a key design decision. The sustenance stage involves implementing orchestration that supports ease of use, ease of deployment, enhancements, and optimization of AI models for additional datasets.
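One way to picture the edge/cloud partition is as a filter that runs on the device and decides which inference results are worth sending upstream. The following is a minimal sketch under stated assumptions: the `Detection` type, the `split_edge_cloud` function, and the 0.6 confidence threshold are all hypothetical and are not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical result produced by an on-device model."""
    label: str
    confidence: float
    frame_id: int


def split_edge_cloud(detections, labels_of_interest, min_confidence=0.6):
    """Split detections into (upload, keep_local).

    Only confident detections of interesting labels are marked for the cloud;
    everything else stays on the device.
    """
    upload, keep_local = [], []
    for det in detections:
        if det.label in labels_of_interest and det.confidence >= min_confidence:
            upload.append(det)
        else:
            keep_local.append(det)
    return upload, keep_local


detections = [
    Detection("person", 0.92, frame_id=1),
    Detection("person", 0.41, frame_id=2),   # below threshold, stays local
    Detection("vehicle", 0.88, frame_id=3),  # not a label of interest
]
upload, keep_local = split_edge_cloud(detections, labels_of_interest={"person"})
print(len(upload), "to cloud,", len(keep_local), "kept at the edge")
```

In a real deployment the threshold and the labels of interest would be tuned per use case, and the upload step would also be subject to the record-keeping requirements of the record stage.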

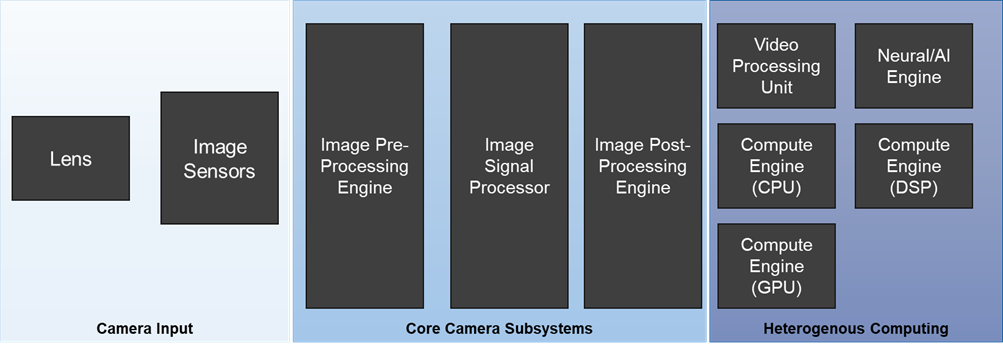

The camera subsystem is one of the most critical parts of a machine vision solution. It comprises three main elements:

- Camera Input Subsystem

- Core Camera Subsystems

- Heterogeneous Computing Subsystem

The camera input subsystem consists of the lens and image sensor. Depending on the end application, there can be one or more image sensors used. Robotics applications utilize different types of image sensors (RGB, Time of Flight, LiDAR, etc.) for performing sensor fusion. Healthcare equipment meant for personal diagnosis may have one or more types of image sensors to identify anomalies or take readings. For security and surveillance applications, commonly used image sensors are RGB and IR.

The selection of the lens and image sensor is heavily dependent on the use case. Security and surveillance applications require sensors with higher pixel density and larger pixel size than those found in cellular phones, which do not have the same need. Similarly, inspection systems need line scan or area scan sensors, whichever is more appropriate for the application. Key factors in the selection include:

- “Optics”: The optics ensure that the camera captures the objects you are actually interested in.

- “Lighting conditions and dynamic range”: The sensor should preserve the relative intensity between the brightest and darkest details in the image and pass as much of this information as possible to the vision algorithms.

- “Type of target”: A rolling shutter suits use cases that do not involve motion capture, and a global shutter suits use cases that do.

- “FoV” (field of view): A wider field of view suits use cases like retail analytics, where the camera can capture a larger dataset (of people) entering and exiting the store.
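The selection factors above can be approximated with a few standard formulas. The sketch below assumes a rectilinear lens focused at infinity for the field-of-view calculation, the common 20·log10 convention for sensor dynamic range, and a simple row-by-row readout model for rolling-shutter skew. The function names and sample numbers are illustrative assumptions, not values from a specific sensor datasheet.

```python
import math


def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view for a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def dynamic_range_db(brightest_signal, darkest_signal):
    """Dynamic range in decibels, using the 20*log10(ratio) convention."""
    return 20 * math.log10(brightest_signal / darkest_signal)


def rolling_shutter_skew_px(speed_px_per_s, rows, line_time_s):
    """Horizontal shift between first and last row for an object moving sideways.

    Assumes rows are read out one after another, each taking line_time_s.
    """
    return speed_px_per_s * rows * line_time_s


# Illustrative numbers only.
fov = horizontal_fov_deg(sensor_width_mm=6.4, focal_length_mm=4.0)
dr = dynamic_range_db(brightest_signal=1000.0, darkest_signal=1.0)
skew = rolling_shutter_skew_px(speed_px_per_s=1000, rows=1080, line_time_s=15e-6)

print(f"FoV: {fov:.1f} deg, dynamic range: {dr:.0f} dB, skew: {skew:.1f} px")
```

A skew of more than a few pixels is one indication that a global shutter may be the better fit for a motion-heavy use case.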

The core camera subsystem consists of pre-processing, image signal processing, and post-processing blocks. These are critical for improving image quality to be used by subsequent subsystems for analytics and decision making.

The heterogeneous computing subsystem focuses on computational needs based on the target use case. Heavy AI models may require a powerful AI engine, with inference running on the GPU, DSP, or CPU. When performing face detection for security/surveillance use cases, for example, the model should run on whichever of these engines best fits the current system load and computation requirements. Key design considerations include the selection of the CPU core, GPU, and DSP and their associated KPIs, such as floating-point performance and latency, as they impact outcomes. AI engines are commonly rated in TOPS (tera operations per second), a heavily advertised figure, but the more meaningful metrics for AI engine performance are inferences per second and inference latency.
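Inference latency and inferences per second can be measured directly rather than inferred from a TOPS figure. The following is a minimal timing harness, assuming the model is exposed as a plain Python callable. The `benchmark` function and the `dummy_model` stand-in are hypothetical; a real measurement would call the target runtime on the actual CPU, GPU, or DSP.

```python
import statistics
import time


def benchmark(infer, sample, warmup=3, runs=20):
    """Time repeated calls to infer(sample) and summarize the results."""
    for _ in range(warmup):          # warm-up runs are not timed
        infer(sample)

    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies.append(time.perf_counter() - start)

    mean_s = statistics.mean(latencies)
    # Approximate 95th percentile: nearest rank, rounded down.
    p95_s = sorted(latencies)[int(0.95 * (runs - 1))]
    return {
        "runs": runs,
        "mean_latency_ms": mean_s * 1e3,
        "p95_latency_ms": p95_s * 1e3,
        "inferences_per_second": 1.0 / mean_s,
    }


def dummy_model(values):
    """Hypothetical stand-in for a real inference call."""
    return sum(v * v for v in values)


result = benchmark(dummy_model, list(range(10_000)))
print(result)
```

Running the same harness against each candidate engine, under realistic system load, gives a like-for-like comparison that a headline TOPS number does not.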

With vast experience and in-depth expertise in designing custom camera solutions, eInfochips has helped OEMs stay ahead of the technology curve. In collaboration with Arrow and Qualcomm, eInfochips has set up “Edge Labs”, a Center of Excellence that offers Qualcomm Subject Matter Experts (SMEs), end-to-end product development services, a state-of-the-art lab, and equipment to accelerate product development. eInfochips has delivered 30+ camera designs, leveraging an in-house state-of-the-art image tuning lab. The company also has experience with the latest AI frameworks and tools (TensorFlow, OpenCV, Python, Caffe, Keras). Additionally, eInfochips has developed a Reusable Camera Framework (RCF) to accelerate time-to-market and significantly reduce development efforts, providing a proven, tested feature set.

For more information, please reach out to marketing@einfochips.com